Almost Timely News: 🗞️ You Can’t Reasonably Measure AI Brand Strength (2024-08-18)

👉 Register for my upcoming free webinar, Generative AI for Hospitality!

Content Authenticity Statement

90% of this week’s newsletter was generated by me, the human. You’ll see some AI outputs in the opening section. Learn why this kind of disclosure is a good idea and might be required for anyone doing business in any capacity with the EU in the near future.

What’s On My Mind: You Can’t Reasonably Measure AI Brand Strength

Be very cautious of AI search results tools.

Not because it’s a bad idea to think about how your brand is positioned within language models like GPT-4o (the model that powers ChatGPT today), Claude, or Gemini, but because making that determination is a nearly impossible task.

If You Don’t Feel Like Getting Into The Weeds

Today’s newsletter is going to be very technical, so here’s the TL;DR:

Using “AI optimization” tools to determine your “AI SEO” is a bit of a fool’s errand because AI models do not work the way search engines do. If you want to influence AI models, the best thing you can do is publish very high volumes of very high quality content – in other words, traditional SEO. The main difference is that instead of links as the gold standard of measurement, it’s appearances around the public web.

That’s it. Go home. See you next week.

…now, if you’d like to stick around for the detailed explanation, grab your libation of choice, find a comfortable position, and let’s dig into the weeds.

Part 1: How AI Models Work

To learn why trying to measure our findability in AI models is a bit of a fool’s errand, we first need to know how these things work. As a reminder, a model is the engine of today’s generative AI tools. Services like ChatGPT use models like GPT-4o under the hood.

When these models are built by deep-pocketed technology companies, they’re trained on massive amounts of text data, 7-10 petabytes of text, mostly extracted from the public web. To give you a rough sense of how much text that is, all of William Shakespeare’s plays fit inside roughly 5.4 megabytes. 7 petabytes is 1.3 billion copies of Shakespeare’s works. If each volume is about 3 inches thick, 7 petabytes is a stack of Shakespeare’s books long enough to circle the equator more than twice.
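If you want to check that arithmetic yourself, here is a rough back-of-the-envelope sketch. It assumes decimal units (1 petabyte = 10^15 bytes, 1 megabyte = 10^6 bytes) and an equator of about 40,075 km; those conversion figures are my assumptions, and the function names are made up for illustration.

```python
# Back-of-the-envelope check of the Shakespeare comparison.
# Assumptions: decimal units, 3-inch-thick volumes, equator ~40,075 km.
PETABYTE = 10**15
MEGABYTE = 10**6
INCH_IN_KM = 2.54e-5  # 1 inch = 2.54 cm
EQUATOR_KM = 40_075


def shakespeare_copies(corpus_petabytes, works_megabytes=5.4):
    """How many copies of the complete plays fit in the corpus."""
    return corpus_petabytes * PETABYTE / (works_megabytes * MEGABYTE)


def equator_laps(copies, inches_per_copy=3.0):
    """How many times a stack of those copies would wrap the equator."""
    return copies * inches_per_copy * INCH_IN_KM / EQUATOR_KM


copies = shakespeare_copies(7)
print(f"{copies / 1e9:.1f} billion copies")
print(f"{equator_laps(copies):.1f} laps of the equator")
```

Under these assumptions, the 7 petabyte figure works out to roughly 1.3 billion copies and a stack that wraps the equator a bit more than twice.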

That’s how much text you need to train today’s models. It’s also why only a few companies can make state of the art foundation models – it costs millions of dollars in time and hardware just to digest that amount of text.

The first part of the process is breaking down words into tokens, little three and four letter fragments of words.

Then, every token is turned into a number.

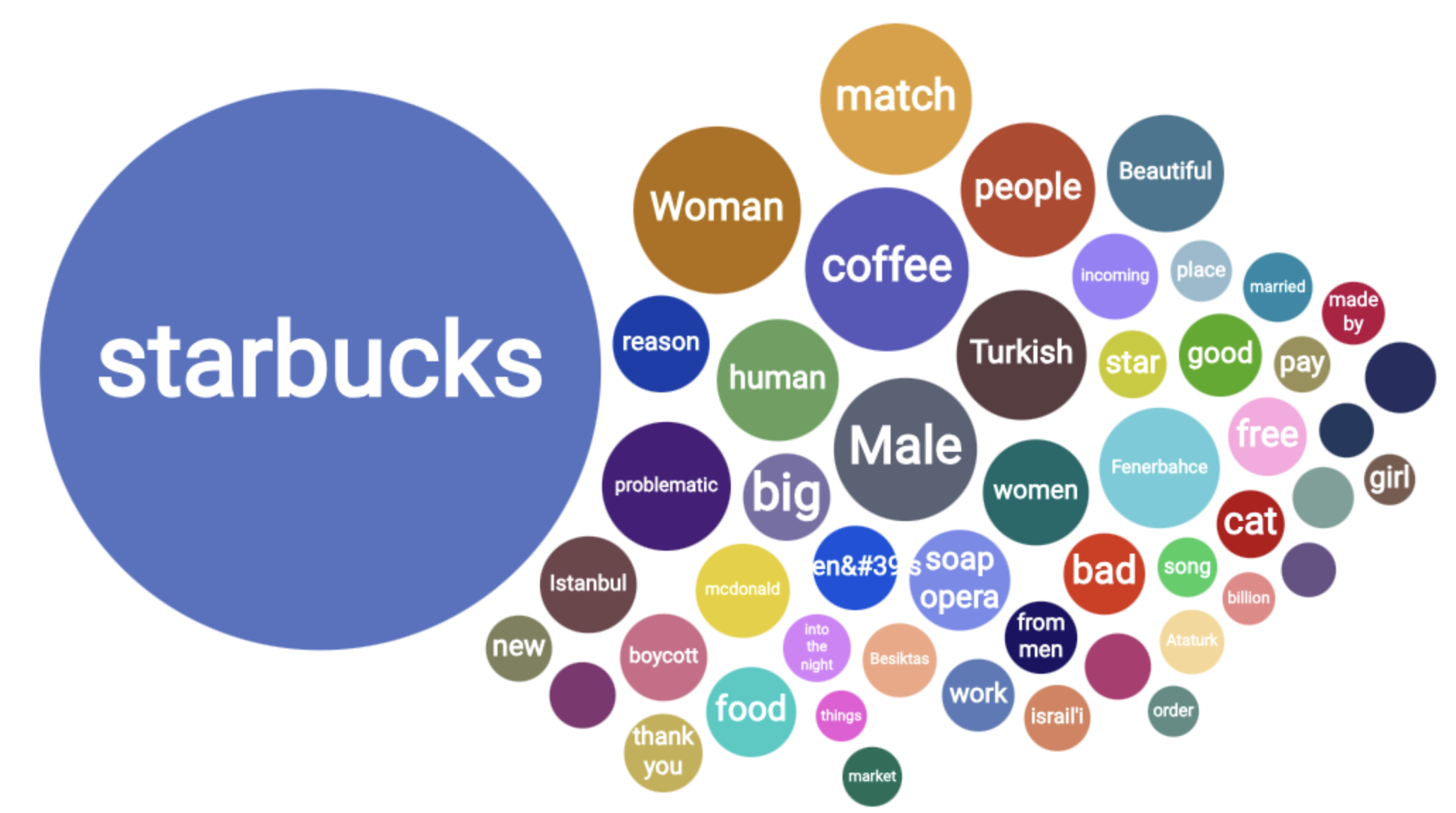
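To make those two steps concrete, here is a toy sketch. It is not how production tokenizers work: real models learn their word fragments from data, while this example simply cuts each word into chunks of up to four letters and hands out ID numbers in order of first appearance. The function names are hypothetical.

```python
# Toy illustration only: real tokenizers learn their fragments from data;
# here we simply cut each word into chunks of up to four letters.
def split_into_tokens(text, size=4):
    tokens = []
    for word in text.lower().split():
        tokens.extend(word[i:i + size] for i in range(0, len(word), size))
    return tokens


def build_vocabulary(tokens):
    """Assign each distinct token the next unused integer ID."""
    vocab = {}
    for token in tokens:
        vocab.setdefault(token, len(vocab))
    return vocab


tokens = split_into_tokens("Starbucks serves coffee")
vocab = build_vocabulary(tokens)
ids = [vocab[t] for t in tokens]
print(tokens)  # word fragments
print(ids)     # the numbers that stand in for them
```

The point is only that the model never sees words, just fragments and the numbers that stand in for them.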

Then, every token’s position is compared to every other token’s position in a massive statistical database. That’s what is under the hood in every large language model: a big pile of statistics. The way I recommend people think about this conceptually is to think about word clouds. In any given piece of text, what’s the word cloud for EVERY WORD in that text? That’s essentially what’s going on, conceptually. (Mathematically, that’s not true, but no one wants to do linear algebra in a newsletter.)

When we ask a model something, using a prompt, the model breaks down our question into tokens, then goes into its library and fetches all the word clouds it can find that match those tokens.

For example, suppose you looked at all the word clouds on the Internet around the term Starbucks. You would see words like coffee and tea. You would also see thousands of other words. That’s what is inside a large language model. When we give a model a prompt, what comes back are the most related words (actually tokens, but words is fine for this conceptually) that the model maker’s software has seen all over the Internet.
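Here is a minimal sketch of the word cloud idea, using simple co-occurrence counts. To be clear, this is the conceptual picture only, not the actual math inside a language model, and the three sentences are made up for illustration.

```python
from collections import Counter, defaultdict


def word_clouds(sentences, window=2):
    """For each word, count the words seen within `window` positions of it."""
    clouds = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            lo, hi = max(0, i - window), min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    clouds[word][words[j]] += 1
    return clouds


# Made-up sentences, purely for illustration.
corpus = [
    "starbucks sells coffee and tea",
    "i bought coffee at starbucks",
    "starbucks coffee is everywhere",
]
clouds = word_clouds(corpus)
print(clouds["starbucks"].most_common(3))
```

Even in this tiny corpus, coffee floats to the top of the Starbucks cloud. Now imagine doing that for every fragment of every word across petabytes of text.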

This is why attempting to measure a brand’s presence in a language model is a bit of a fool’s errand. It’s not like regular SEO, where you can see how web pages relate to each other through links. Instead, every part of every word is related to every part of every other word, phrase, sentence, paragraph, and document.

Part 2: How People Behave

The technical obstacles to measurement are tough enough to deal with. However, there’s an additional wrinkle. In old school SEO, we pay attention to things like words. You’ll still see things like keyword lists floating around, and keyword planning tools. Those are fine when you’re measuring links and checking the text of words in links as a way to determine what a page is authoritative about.

All that goes out the window in generative AI, because that’s not how people talk to AI. People don’t type in things like:

“Trust Insights consulting” or “management consulting firm”

They ask real questions and have real conversations: “Give me a list of recommended management consulting firms that specialize in healthcare in the Boston area,” and “Who is Trust Insights and what do they do?” and “What’s a good recipe for guacamole for people who are allergic to citrus?”

You might think, “Oh, then we can just ask common questions and measure how often we come up in responses, right?”

Except, going back to part 1, every word gets tokenized when we prompt a model. The more words, the more tokens, and the more specific and clear the response… and the harder it is to predict what people themselves are going to type into an AI model, and the more likely it is the model is going to return different results.

Here’s an example. Suppose I type in:

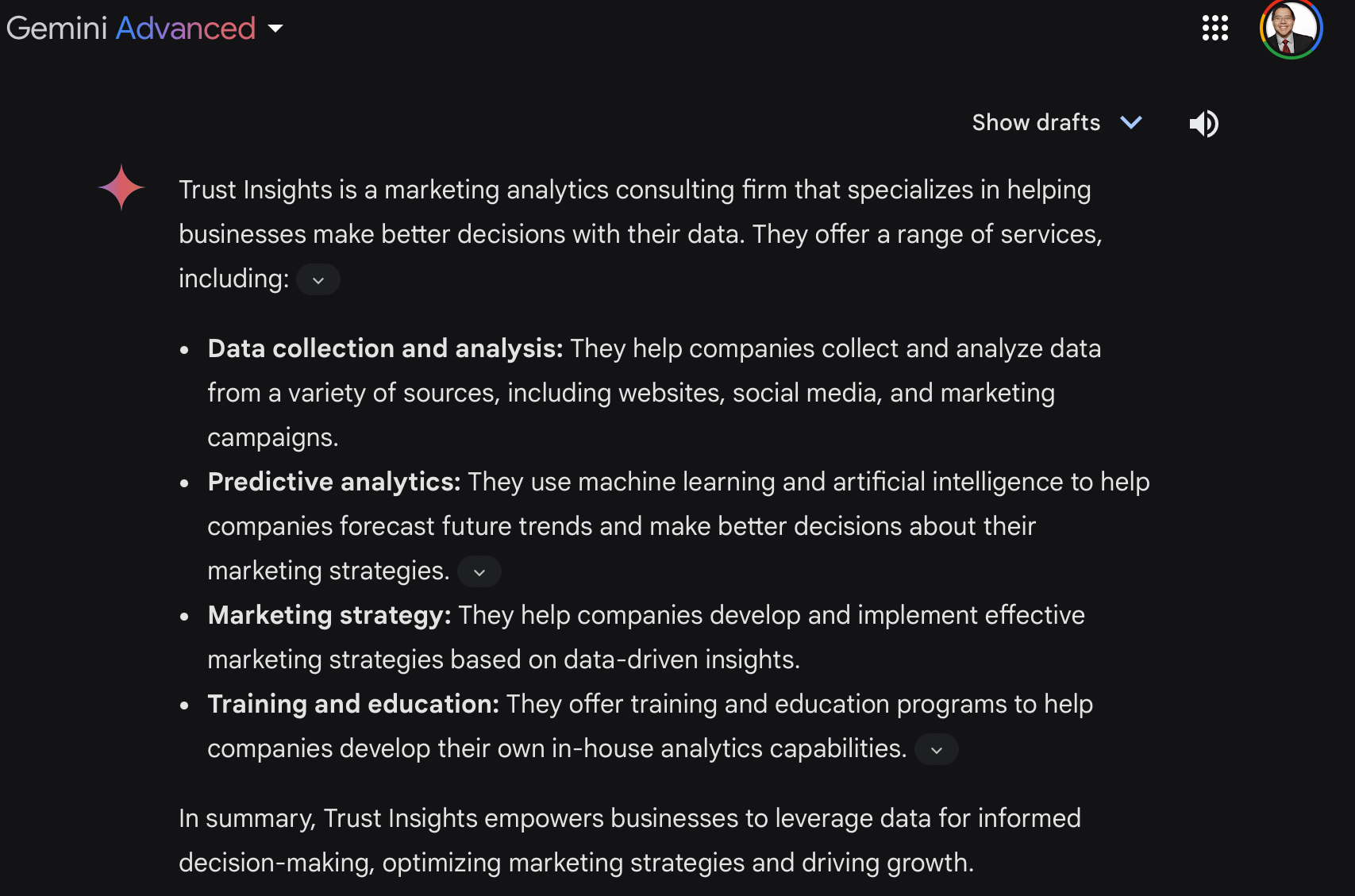

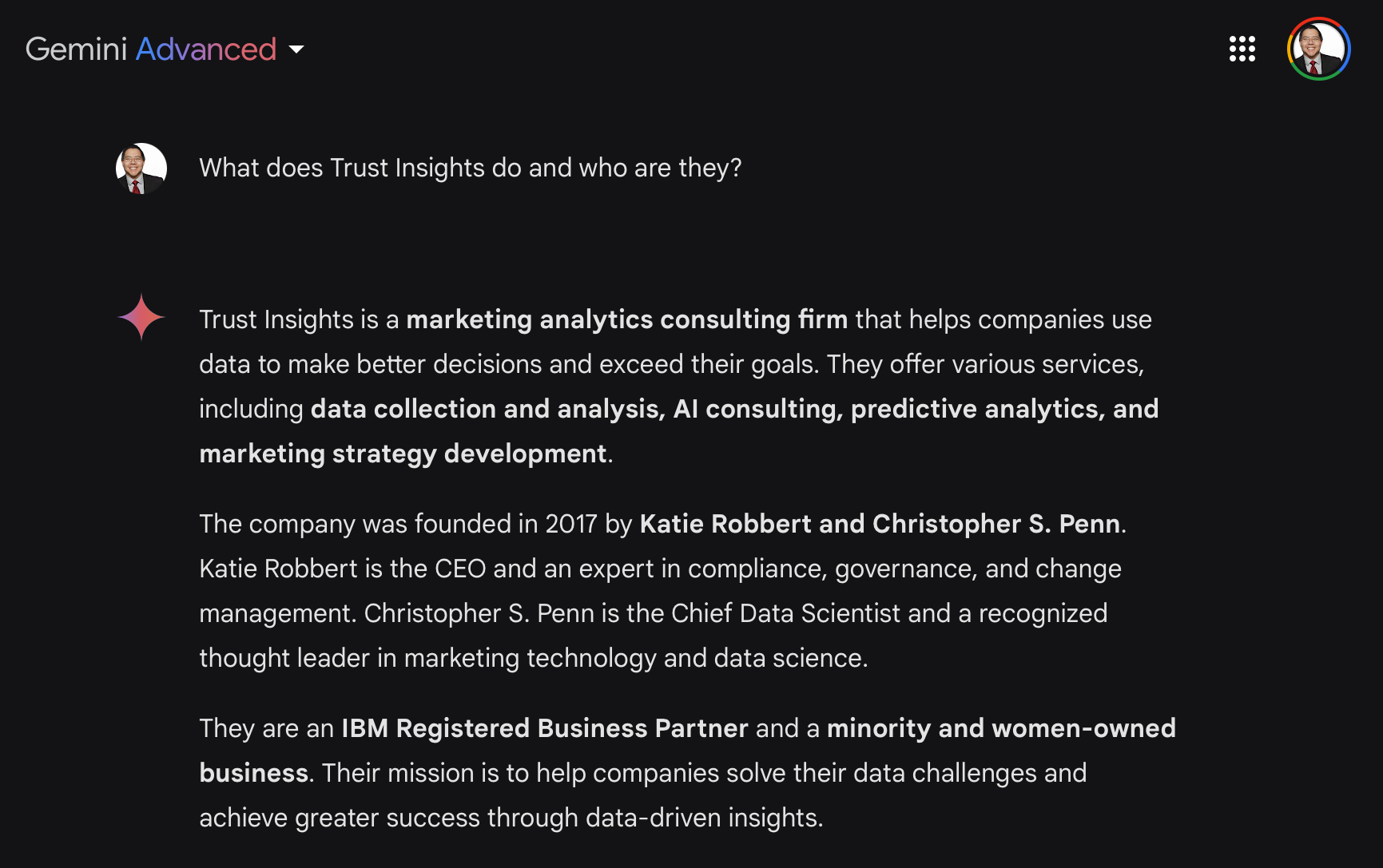

“Who is Trust Insights and what do they do?”

I’ll get a response from Google’s Gemini model that looks like this:

Now, suppose I type:

“What does Trust Insights do and who are they?”

Before we look at the results, it should be clear that these are basically the same question. We should get basically the same answer, right?

Nope.

These are wildly different answers. Both are factually correct, but you could not easily predict what the answers would be. If you’re trying to measure even whether or not your brand is in an AI model, you can see how complicated this could get.

Why does this happen? Remember how these models work: when they’re first trained, they take into account the order in which words appear.

The order of words matters. In search engines, in traditional search, word order matters very little because it’s a much different language technology. In language models, word order and even seemingly unimportant words can trigger different token activations and lead to very different results.

Imagine how problematic this becomes when an informational query like “Recommend some top consulting firms in the Boston area” and “Recommend some Boston area top consulting firms” yields totally different results. Good luck measuring your brand presence on every possible way of wording informational queries.
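A small sketch shows the difference between the two views of a query. The keyword view below is a deliberate oversimplification of traditional search (real search engines do far more), and the stop-word list is a hypothetical one chosen for this example.

```python
from collections import Counter

# Hypothetical stop-word list; real search engines use far richer processing.
STOP_WORDS = {"in", "the", "a", "an", "of"}


def keyword_bag(query):
    """Order-free view: which keywords appear, and how often."""
    return Counter(w for w in query.lower().split() if w not in STOP_WORDS)


def token_sequence(query):
    """Order-aware view: the sequence of words in the order they were typed."""
    return query.lower().split()


q1 = "Recommend some top consulting firms in the Boston area"
q2 = "Recommend some Boston area top consulting firms"

print(keyword_bag(q1) == keyword_bag(q2))        # True: same keywords
print(token_sequence(q1) == token_sequence(q2))  # False: different sequence
```

To a keyword-style system, the two queries are the same thing. To anything that reads the words in order, they are two different inputs, which is why the outputs can diverge.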

Part 3: A Cornucopia of Models

Okay, so the conversational nature of generative AI means it’s very, very difficult to predict what an AI model will even say, much less measure our brand strength in it. Let me add an even bigger wrinkle.

There are many, many more models in generative AI than there are in traditional search. In traditional search, you mainly have to worry about ranking well in Google. In generative AI, you have a much, much wider field. Here are just a few:

- Google’s Gemini powers Gemini (the app) as well as Google’s AI Overviews and every other place they try to stuff Gemini.

- GPT-4o powers ChatGPT and the many, many companies that have built their infrastructure on OpenAI’s platform, like HubSpot’s ChatSpot.

- Anthropic’s Claude models power a variety of writing apps as well as their own web app.

- Meta’s Llama family of models powers Meta AI and the AI search in all of Meta’s apps like Facebook, Instagram, WhatsApp, and Messenger.

- Mistral’s family of models powers apps and services around the world but especially in the EU.

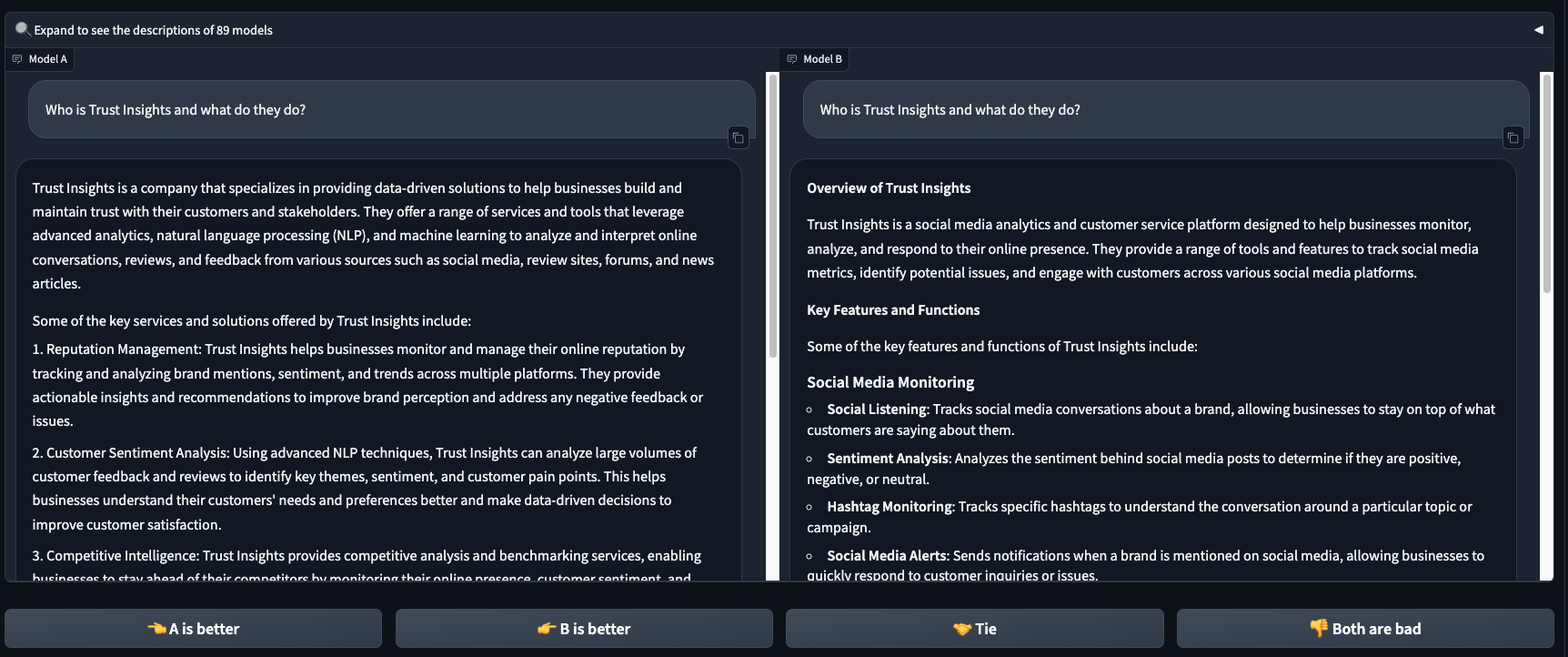

And there are many, many, many more. You can see and test the top 89 (yes, 89) in LMsys’ Chatbot Arena:

Any company or tool saying they can measure your brand’s presence in AI had better be able to query at least these major models, not to mention the tens of thousands of smaller models that are in production, like IBM’s Granite in WatsonX.

In fact, IBM WatsonX is a great example of how challenging even answering the question of AI presence can be. People building enterprise apps on that platform can choose from over a dozen different models. If you want to influence large enterprises using the WatsonX system, you’d need to influence each of those models.

If any company or piece of software is telling you they can measure your brand’s presence in an AI model, and it can return that result in less than a day, they’re lying. There’s no kind way to put it. They’re lying. You would need to query dozens, if not hundreds of different ways of asking a model questions about your brand, tens of thousands of different ways to ask questions about your industry or niche, and ask those questions of at least half a dozen, if not more models. Chatbot Arena, one of the top sites for testing and measuring models, has 89 top models to test. Even fully automated, you’re not getting that answer in under a day, and certainly not for free. The compute costs alone would be in the hundreds of dollars just for one model.
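To get a feel for the scale, here is a rough sizing sketch. Every input is an illustrative assumption picked from the ranges above (200 brand phrasings, 20,000 industry phrasings, 6 models, 5 seconds per response), not a measured figure, and the function names are made up.

```python
# All inputs are illustrative assumptions, not measured figures.
def audit_size(brand_phrasings, industry_phrasings, models, runs_per_prompt=1):
    """Total number of model calls needed to cover every prompt on every model."""
    return (brand_phrasings + industry_phrasings) * models * runs_per_prompt


def audit_hours(total_calls, seconds_per_call, parallel_workers=1):
    """Wall-clock hours if each call takes `seconds_per_call` on average."""
    return total_calls * seconds_per_call / parallel_workers / 3600


calls = audit_size(brand_phrasings=200, industry_phrasings=20_000, models=6)
print(calls)
print(round(audit_hours(calls, seconds_per_call=5), 1))
```

With those assumed numbers, that is over a hundred thousand model calls and about a week of wall-clock time if run one call at a time. Running calls in parallel shortens the clock, but it does not reduce the number of calls you pay for.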

Part 4: Ground Truth

Most generative AI services that offer consumer interfaces have started creating grounding in their software; that is, the software gets answers from the model and then essentially fact checks them with website data, typically from search engines. Meta’s AI model does this. ChatGPT does this. Google’s Gemini does this.

Which means… while people may not necessarily use search engines the way they used to or as much as they used to, optimizing your content for search engines is still vitally important. Showing up in search engines means that when an AI model is unsure about its answer, if the model has the ability to call tools (like web searches), it’s going to ask the nearest search engine.

Those sites and pages that do well in legacy, traditional search will be the points of reference for AI models that are checking their own work, which is a polite way of telling you not to fire your SEO team just yet.
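The grounding flow might look roughly like the sketch below. Every function here is a hypothetical stand-in: no real model or search API is called, and the confidence threshold is an assumed simplification of however a given vendor decides the model is unsure.

```python
# Hypothetical stand-ins: no real model or search API is called here.
def model_answer(question):
    """Pretend model: returns a draft answer and a confidence score."""
    return "(draft answer)", 0.4


def web_search(question):
    """Pretend search tool: returns the top-ranked pages for the question."""
    return ["https://www.example.com/about"]


def grounded_answer(question, ask=model_answer, search=web_search, threshold=0.7):
    """Draft an answer, and consult search only when confidence is low."""
    draft, confidence = ask(question)
    sources = []
    if confidence < threshold:
        # The model is unsure, so it checks its work against search results.
        sources = search(question)
    return {"answer": draft, "sources": sources}


result = grounded_answer("Who is Trust Insights and what do they do?")
print(result["sources"])
```

Whatever `web_search` returns first is what the model checks itself against, which is exactly why ranking well in traditional search still matters.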

Part 5: So What Do We Do?

Let’s get to the So What? as Katie Robbert likes to say. Okay, we effectively cannot measure our brand presence in an AI model because the architecture of the model simply makes that infeasible.

But what we can do, what we know how to do, is create content on the Internet that’s available for models to learn from. Almost every AI company making models uses some or all of the Common Crawl archive of public web pages as the base data for its model training. Common Crawl is a regularly updated massive archive of text, 6.2 petabytes as of the last crawl, and is something you can – if you have the disk space – inspect yourself to see what’s inside. You can download portions of it and see what part of your website has been scraped. You can see what news outlets, what blogs, what podcasts, etc. are inside their archive – and by extension, inside the training data for language models.
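If you’d rather not download anything, Common Crawl also runs a public index server you can query by domain. Here is a minimal sketch using only Python’s standard library. The crawl ID below is an assumption for illustration, so check the published crawl list for current ones, and `example.com` is a placeholder for your own domain.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed crawl ID for illustration; the list of available crawls is
# published at https://index.commoncrawl.org/collinfo.json
CRAWL_ID = "CC-MAIN-2024-33"


def index_query_url(domain, crawl_id=CRAWL_ID, limit=20):
    """Build a Common Crawl index query for captured pages on a domain."""
    params = urlencode({"url": f"{domain}/*", "output": "json", "limit": limit})
    return f"https://index.commoncrawl.org/{crawl_id}-index?{params}"


def parse_captures(body):
    """The index returns one JSON object per line; keep the captured URLs."""
    return [json.loads(line)["url"] for line in body.splitlines() if line.strip()]


def captured_pages(domain):
    """Network call: fetch and parse the captures for a domain."""
    with urlopen(index_query_url(domain)) as response:
        return parse_captures(response.read().decode("utf-8"))


# Only builds the query URL; call captured_pages("example.com") to fetch.
print(index_query_url("example.com"))
```

If your pages come back in the results, they are in that crawl, and plausibly in the training data of any model built on it.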

We can and should be creating high quality, high volume content everywhere we can. Make YouTube videos at scale – we know for sure companies like NVIDIA have scraped millions of hours of YouTube video for their model training. I am almost 100% confident OpenAI has scraped the same from YouTube, because in their voice transcription model, Whisper, it recognizes and tags my voice in audio.

We should be creating content in all the places we know scrapers go, and AI models go to obtain training data. Github. StackExchange. Reddit. YouTube. Local news sites. Substack. Various social networks.

And finally, but probably most important, we should be building brand like crazy. You should be building your personal brand by being in as many places as you feasibly can be. You should be publishing guest content wherever you can. You should be on every podcast that will have you. You should be joining livestreams and being on any YouTube channel you can be.

We want models to train on our names and our topics from as much text as possible, which means we need to create that text in as many places on the Internet as we can. However, instead of chasing inbound links as we used to in SEO, now we’re just chasing placements, period. Believe it or not, this is where public relations firms and teams could make a huge difference for their companies. The best firms and teams excel at getting media placements, so their strategy just has to pivot from “we want top tier media” to “we’ll take any media we can get”.

You Can’t Reasonably Measure AI Brand Strength

The bottom line is this: yes, you can measure your brand’s placement in AI, but it’s a time-intensive, compute-intensive, very expensive process to do so if you do it well. (It’s free and easy if you want inaccurate, incorrect answers.)

However, you can absolutely measure the impact of AI on your business using the gold standard of measurement: asking people how they learned about you. There is no substitute for this, there never has been, and when done properly, it will always be a timeless, valuable source of marketing attribution.

If you’d still like to try measuring brand strength in AI and feel like lighting a lot of money on fire, my company Trust Insights will try to talk you out of that but will still do it if you absolutely insist.
