Almost Timely News: 🗞️ How to Make Generative AI Sound More Like You (2024-08-25)

👉 Enjoy my latest on-demand free webinar, Generative AI for Hospitality!

Content Authenticity Statement

80% of this week’s newsletter was generated by me, the human. You’ll see lots of AI outputs in the opening section, plus an AI summary. Learn why this kind of disclosure is a good idea and might be required for anyone doing business in any capacity with the EU in the near future.


What’s On My Mind: How to Make Generative AI Sound More Like You

This week, let’s tackle one of the most common complaints about content generation using generative AI:

“It doesn’t sound like me.”

Why doesn’t AI sound like you, and what can you do about it?

TL;DR

Don’t feel like reading this week’s issue? Here’s the AI-generated summary, summarized with Google Gemini and complete with very AI-sounding content.

This issue of the newsletter explores the mechanics of how AI writes, emphasizing the influence of training data and the prevalence of common, and often bland, language. It delves into the complexities of writing style, highlighting the numerous components that contribute to an individual’s unique voice. The newsletter then provides a step-by-step guide on how to prompt AI to emulate your style, involving detailed analysis, scoring rubrics, and iterative refinement.

Now, if you’re ready to dig in, grab a beverage, your laptop or computer, and a comfortable chair.

Part 1: How AI Writes

Let’s go back to the basics to review how generative AI systems – language models in particular, like the ones that power ChatGPT and Claude – work. These models are built using massive amounts of text data. As I mentioned in last week’s newsletter, the average model is trained on 7-10 petabytes of text data. That’s a bookshelf full of text-only books that stretches around the equator twice.

Every word is broken down into small word fragments (tokens), and then each token’s position and frequency are measured against every other token, word, phrase, sentence, paragraph, and document. What you end up with after this extremely time-intensive process is a giant database of statistics.

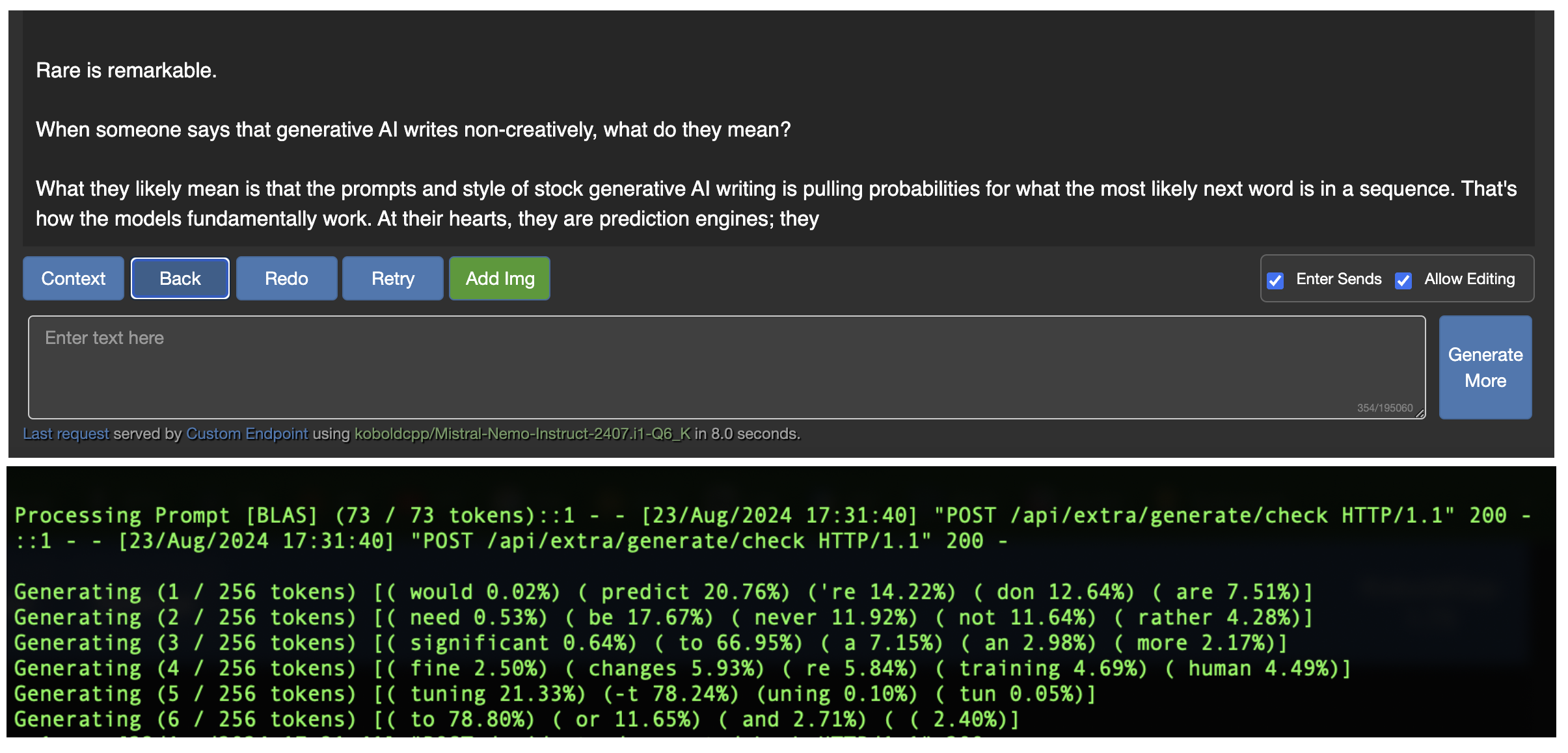

When we prompt AI to generate text with naive, short prompts, it naturally selects the highest probability tokens and assembles them into words. Here’s an example from a LinkedIn post I wrote the other day.

You can see that in this naive example – the prompt was, “continue this LinkedIn post” – it’s choosing the most likely word pieces to assemble.

Here’s the problem: by definition, the most probable words are also the most common. And the most common words are… well, boring.
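To make the “database of statistics” idea concrete, here is a toy sketch in Python. It is a deliberate simplification: it counts whole words rather than tokens, it only looks at adjacent pairs, and the three-sentence corpus is made up for illustration. Real language models are far more sophisticated, but the sketch shows why the highest-probability pick is, by construction, the most common one.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; a real model is trained on vastly more text.
corpus = (
    "we help brands embark on a journey . "
    "we help teams embark on a journey . "
    "we help brands grow revenue ."
)

def build_follower_counts(text: str) -> dict[str, Counter]:
    """Count how often each word directly follows each other word."""
    words = text.split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts

def next_word_probabilities(counts: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follower counts for `word` into probabilities."""
    total = sum(counts[word].values())
    return {follower: n / total for follower, n in counts[word].items()}

def most_likely_next(counts: dict[str, Counter], word: str) -> str:
    """Greedy choice: the single most frequent follower of `word`."""
    return counts[word].most_common(1)[0][0]

counts = build_follower_counts(corpus)
print(next_word_probabilities(counts, "help"))
print(most_likely_next(counts, "help"))
```

In this corpus “brands” follows “help” more often than “teams” does, so the greedy choice after “help” is “brands” every time. Scale that up to a few petabytes of text and you get the same effect with “delve”.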

Take some of the hallmark words of unguided AI-generated text like “delve”, “absolutely!”, and “embark”:

Why are these so frequent in the text AI generates?

Well, because they’re frequent in the text AI was trained on. Think about it for a moment: what’s one of the largest sources of publicly available, free text data? Marketing content. We’ve been generating marketing content at a blistering pace for 25 years, ever since the dawn of SEO and search engines. Company websites are filthy with terms like “embark” and other corporate mumbling. And all that has filtered into the content AI generates.

Here’s an additional gotcha about this kind of language. Remember that AI generates content based on what it’s already seen in any given conversation. If it can tell just from our prompts or a couple of paragraphs of sample information that we’re working on marketing content, the probabilities it chooses will be from… marketing content it’s already seen.

Cue the tsunami of “delves”.

Delve occurs in the style of text we’re writing; if you give generative AI a different kind of starting document or prompt, it’s going to come up with different high-probability words. Give it some purple prose, and you’ll see an avalanche of words and phrases that commonly occur in fan fiction (one of the largest sources of text data from sites like Archive Of Our Own).

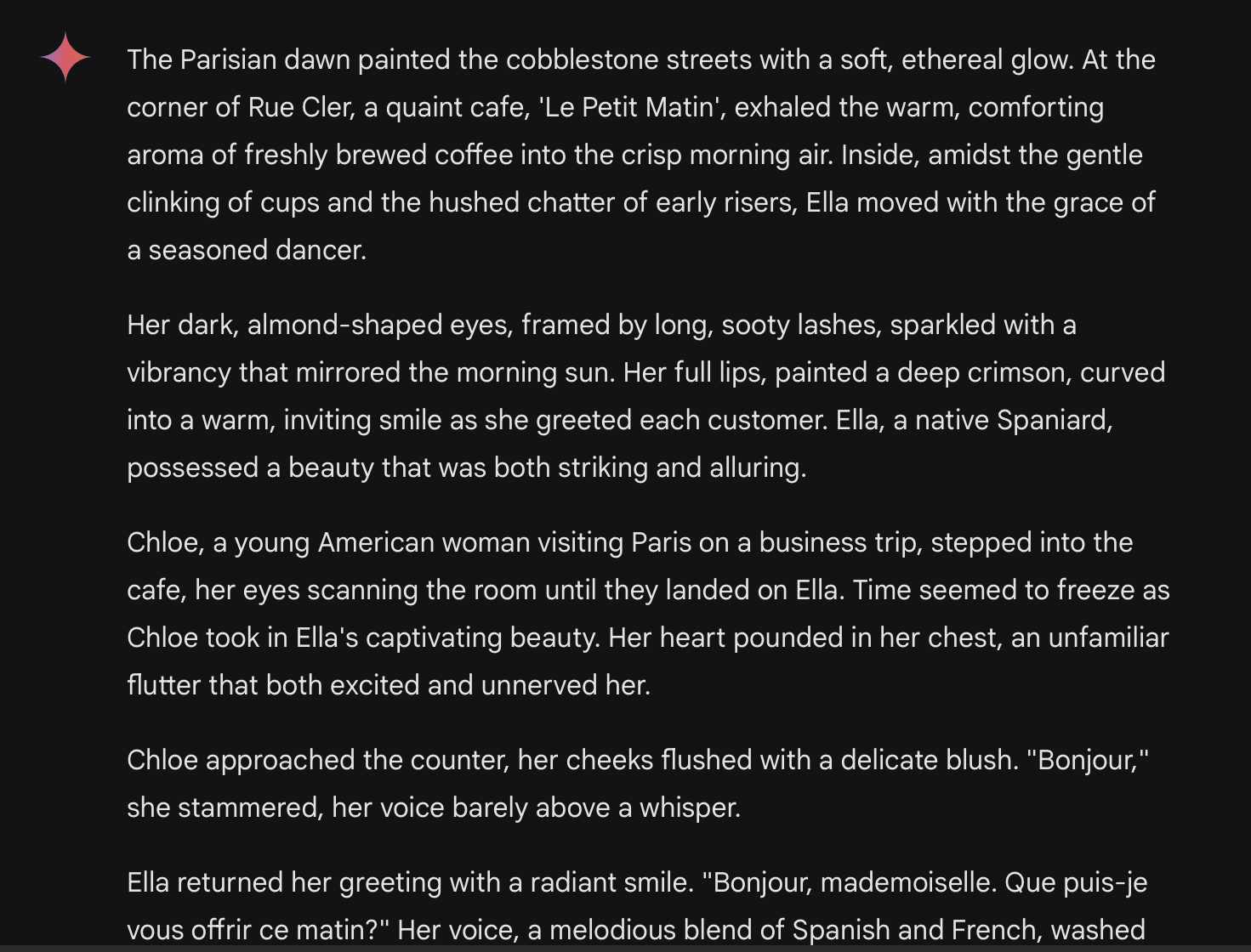

Here’s an example. This is with a very short prompt:

Not a delve in sight. Why? Just from the prompt alone, the language model understands that this isn’t marketing content. Delve isn’t going to show up because in the context of this language, it’s not common.

That’s a key point we’ll come back to. Language models choose words based on the context of the words that have been in the conversation so far.
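Here is a toy illustration of that point in Python. It is only a sketch under simplifying assumptions: the corpus is invented, it works on whole words rather than tokens, and the “context” is just the one or two words immediately before the gap. The idea it shows is that the same word (“will”) leads to different most-likely continuations depending on what came before it.

```python
from collections import Counter

# Made-up corpus mixing marketing-style and fiction-style sentences.
corpus = (
    "our brand will delve into insights . "
    "our brand will delve into data . "
    "the dragon will devour the knight . "
    "the dragon will devour the village . "
    "the dragon will sleep ."
)

def next_word_counts(text: str, context: tuple[str, ...]) -> Counter:
    """Count the words that directly follow the word sequence `context` in `text`."""
    words = text.split()
    n = len(context)
    followers: Counter = Counter()
    for i in range(len(words) - n):
        if tuple(words[i:i + n]) == context:
            followers[words[i + n]] += 1
    return followers

# With only one word of context, "delve" and "devour" are equally common.
print(next_word_counts(corpus, ("will",)))

# With one more word of context, the likely continuation changes completely.
print(next_word_counts(corpus, ("brand", "will")).most_common(1))
print(next_word_counts(corpus, ("dragon", "will")).most_common(1))
```

A real model conditions on the whole conversation, not two words, which is why a prompt that reads like marketing copy pulls in marketing vocabulary.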

If we want less boring writing, we need to instruct the language model to generate less common words.

Part 2: Writing Style Basics

What is writing style? How do we know what does and doesn’t sound like us? This is a more complicated question than it first seems, because writing style isn’t a thing. Writing style is an umbrella concept that rolls up dozens of sub-concepts.

For example, diction – word choice – is a huge component of writing style, and one that sets us apart from other writers (including AI). Every individual person’s diction is different because we’ve all been exposed to different language, different words. The words you remember are tied to your emotional experiences in life; even if they’re broadly common words, the order in which you remember them is unique to you.

Don’t believe me? Can you remember the words someone you cared about said that made you fall in love, or broke your heart? Probably. You might even remember the tone of voice they were said in. Either way, that sequence of words is seared into your brain, and when you write, it’s part of how you select language. If you’ve ever tried your hand at writing fiction and really gotten into it, you’ve probably noticed that little bits of language that you’ve said or had said to you crept in.

Diction is just one of more than two dozen pieces of writing style, like pacing, tone, clarity, conciseness, sentence length, paragraph length, etc. – all things that identify you individually, like a fingerprint.
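A few of these components are countable, which makes them easy to see for yourself. The Python sketch below computes three crude signals: average sentence length, average paragraph length, and vocabulary variety. The function name, the metric names, and the naive sentence splitting are all my own assumptions for illustration; components like tone or pacing can’t be measured this simply, which is why the process later in this issue leans on a language model for the analysis.

```python
import re
from statistics import mean

WORD_PATTERN = r"[A-Za-z']+"

def style_metrics(text: str) -> dict[str, float]:
    """Compute a few crude, countable writing-style signals."""
    # Paragraphs are assumed to be separated by blank lines.
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    # Naive sentence split: break after ., !, or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words = re.findall(WORD_PATTERN, text.lower())
    return {
        "avg_sentence_length_words": mean(
            len(re.findall(WORD_PATTERN, sentence)) for sentence in sentences
        ),
        "avg_paragraph_length_sentences": len(sentences) / len(paragraphs),
        # Share of distinct words among all words (type-token ratio).
        "vocabulary_variety": len(set(words)) / len(words),
    }

sample = "Not bad, right? We're not done.\n\nThis is only the first draft."
print(style_metrics(sample))
```

Run it on a few of your own posts and on a few AI drafts, and the differences in sentence and paragraph length alone are often visible.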

This is why it’s so hard for the average user to prompt AI well to write like them. If you can’t articulate the fine details of your own writing style, there’s no way you’re going to be able to tell AI what to do.

Part 3: Prompting AI to Write Like You, Step by Step

So if we want AI to write like us, how do we do that? We have to create a writing environment that helps AI understand what it’s supposed to be doing. To accomplish this, we need samples of our writing – and more is better. Ideally, we have samples of our writing in the context we want to use AI for. How we write an email is different than how we write a book, or how we write a LinkedIn post, or how we caption an image on Instagram.

More is better. A lot more. How much more? AI is going to struggle to capture your style from just a handful of blog posts, in the same way that a ghostwriter would struggle to capture it. If you had 20? That’s better. A year’s worth? Even better. When I build prompts for writing style, a year’s worth of content is good, two years is better.

Writing samples are the first ingredient. Second, we need a language model that can hold that much information in memory. A year’s worth of content might be a couple hundred thousand words, depending on how prolific you are. A model like GPT-4-Omni, the model that powers ChatGPT, can only remember about 90,000 words or so. If you have more than that, you need to move to a larger model. The model I use for this sort of work is Google’s Gemini 1.5, which can remember about 1.5 million words in a conversation. Use the model that best fits the amount of data you have to work with.
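If you’re not sure how big your corpus is, a quick word count settles it. The Python sketch below uses the two approximate word limits quoted above; everything else is an assumption for illustration, including the function names, the one-text-file-per-sample folder layout, and the 80% headroom I leave for prompts and the model’s replies.

```python
from pathlib import Path

# Approximate capacities in words, taken from the figures quoted above.
MODEL_WORD_LIMITS = {
    "GPT-4-Omni": 90_000,
    "Gemini 1.5": 1_500_000,
}

def load_samples(folder: str) -> list[str]:
    """Read every .txt file in a folder (assumed layout: one writing sample per file)."""
    return [path.read_text(encoding="utf-8") for path in sorted(Path(folder).glob("*.txt"))]

def count_words(texts: list[str]) -> int:
    """Total whitespace-separated words across all writing samples."""
    return sum(len(text.split()) for text in texts)

def models_that_fit(total_words: int, headroom: float = 0.8) -> list[str]:
    """Models whose limit covers the corpus while leaving room for prompts and replies."""
    return [
        name
        for name, limit in MODEL_WORD_LIMITS.items()
        if total_words <= limit * headroom
    ]

# Stand-in corpus of 200,000 words; swap in load_samples("your_folder") for real use.
stand_in_corpus = ["word " * 100_000, "word " * 100_000]
total = count_words(stand_in_corpus)
print(total, models_that_fit(total))
```

Word counts are only a rough proxy, because models measure their memory in tokens rather than words, so treat the result as a sanity check and not a guarantee.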

With an appropriate model and a corpus of our own data, we’re ready to get started.

Step 1: Ask the Model What It Knows

We’re going to follow the Trust Insights PARE AI Framework for this process, and the first step is to prime the model. We ask the model what it knows about writing style components, because every model is different. Here’s an example prompt:

Today, we’re going to analyze the writing style of an author, (your name here). What are the major components of a writing style (such as diction, intertextuality, etc.) for an author in the (genre)?

Here’s what it would look like for me:

Today, we’re going to analyze the writing style of an author, Christopher Penn. What are the major components of a writing style (such as diction, intertextuality, etc.) for an author in the nonfiction business book genre?

What we’re doing is invoking the model’s knowledge of writing style from a specific, technical perspective. This is one of the reasons many prompts fail; you need at least a little domain expertise to know how to word your prompts with the specifics a model needs to know. We’ll want to augment it with the other questions in the PARE framework, like:

- What are common mistakes made when analyzing writing style components?

- What aspects of writing style components haven’t we talked about?

Once the model has returned all the major knowledge it has about writing style, ask it to summarize the best practices for analyzing writing style in an outline. This helps reinforce the things we want to focus on.
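If you plan to reuse this step for more than one author or genre, it helps to keep the prompts as templates. Here is a minimal Python sketch that fills in the Step 1 prompts in the order you’d send them. The function name is hypothetical, and the wording of the final outline request is my paraphrase of the instruction above, not a fixed prompt.

```python
def priming_prompts(author: str, genre: str) -> list[str]:
    """Return the Step 1 prompts in the order they would be sent to the model."""
    return [
        f"Today, we're going to analyze the writing style of an author, {author}. "
        f"What are the major components of a writing style (such as diction, "
        f"intertextuality, etc.) for an author in the {genre} genre?",
        "What are common mistakes made when analyzing writing style components?",
        "What aspects of writing style components haven't we talked about?",
        # Paraphrased closing request; adjust the wording to taste.
        "Outline all the best practices for analyzing writing style.",
    ]

prompts = priming_prompts("Christopher Penn", "nonfiction business book")
for number, prompt in enumerate(prompts, start=1):
    print(f"{number}. {prompt}\n")
```

Send them one at a time in the same chat so each answer builds on the last.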

Step 2: Build an Analysis

Now that we’ve got an outline of what writing style even is, we’re ready to move onto the next part, which is analyzing our writing. Here’s an example prompt:

Using the best practices for analyzing a writing style’s components as outlined above, analyze the writing style of (your name here) based on these uploaded documents. Provide a detailed, comprehensive analysis in outline format.

Then upload the text files of your writing. Remember, more is better and more task-specific is better.

Let the language model produce its detailed analysis of your writing style based on the components it analyzed. We’ve now got some very nice details about our specific writing style, broken out by component. This is critical; remember that writing style is an umbrella term. By getting far more granular with each of the components, we’ll be able to give the model better directions.

We’ll also want to have a page or two of examples in a separate document, perhaps a signature piece of content you’ve written. We’ll come back to this part later. Having a piece of signature content is important because it shows the frequency of our writing style components, something that is almost never captured correctly in an analysis of our writing style.

Step 3: Build a Scorecard

As we covered on this past week’s Trust Insights livestream, one of the ways to force a model to do a better job is to force it to self-evaluate. The best way to do this is to have it analyze and score its own work, but to do that, we need to give it a framework for doing so, a scorecard. The magic word here that invokes the right form of analysis is rubric. A scoring rubric reliably helps a model understand what we’re doing.

Here’s an example prompt.

Using the analysis of (your name here)’s writing style components, build a scoring rubric to assess how well a piece of content aligns to the writing style of (your name here). The scoring rubric should use variable integers (0-3, 0-5, 0-7) to score each writing style component, and the scoring rubric should total 100 points.

The model should return a scorecard that we will use to evaluate whether a piece of writing aligns to our writing style or not.
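Models don’t always get the arithmetic right, so it’s worth checking that the scorecard really does total 100 points. Below is a small Python sketch of one way a rubric like this could be represented and checked. The component names, the placeholder entries, and the way points are distributed across the 0-3, 0-5, and 0-7 scales are all invented for illustration; your real rubric comes from the model’s analysis of your writing.

```python
# Scale maximums the prompt allows: 0-3, 0-5, and 0-7.
ALLOWED_MAXIMUMS = {3, 5, 7}

def build_example_rubric() -> dict[str, int]:
    """Map each component to the top of its scale. Names and weights are placeholders."""
    named = ["diction", "tone", "pacing", "clarity", "conciseness",
             "sentence length", "paragraph length", "intertextuality"]
    rubric = {name: 7 for name in named}                                    # 8 x 7 = 56
    rubric.update({f"placeholder component {n}": 5 for n in range(1, 8)})   # 7 x 5 = 35
    rubric.update({f"minor placeholder {n}": 3 for n in range(1, 4)})       # 3 x 3 = 9
    return rubric

def validate_rubric(rubric: dict[str, int], expected_total: int = 100) -> None:
    """Raise ValueError if a scale is not allowed or the maximums miss the total."""
    for component, maximum in rubric.items():
        if maximum not in ALLOWED_MAXIMUMS:
            raise ValueError(f"{component}: scale 0-{maximum} is not allowed")
    total = sum(rubric.values())
    if total != expected_total:
        raise ValueError(f"Rubric totals {total} points, expected {expected_total}")

def total_score(rubric: dict[str, int], scores: dict[str, int]) -> int:
    """Add up per-component scores, rejecting any score outside its scale."""
    total = 0
    for component, maximum in rubric.items():
        score = scores[component]
        if not 0 <= score <= maximum:
            raise ValueError(f"{component}: {score} is outside 0-{maximum}")
        total += score
    return total

rubric = build_example_rubric()
validate_rubric(rubric)
perfect_scores = dict(rubric)  # full marks on every component
print(total_score(rubric, perfect_scores))
```

If validation fails on the rubric your model returned, ask the model to fix the point allocation before moving on.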

We should now have four documents: a list of writing style best practices; a component-by-component analysis of our individual writing style; a page of signature content; and a scorecard to measure how well a piece of content aligns with our writing style.

Step 4: Generate Content

We’re now ready to put these pieces into action. Let’s give the model some instructions and a starting topic to build some content in our writing style. This point is where you could start a new chat; all the work we’ve done up to this point is preparatory work that you only have to do every so often, as long as you saved the documents generated in the previous steps.

Here’s an example prompt. Note that we are still using the Trust Insights RACE AI Framework to guide the model.

You’re an expert in (roles). Your task is to (action). Some background information: (context). Using the writing style of (your name here) as provided, write the blog post about (topic) in the writing style of (your name here). Write 800 words in three sections: what the concept is, why the concept is important, and how to implement the concept. Use the writing style of (your name here) strictly; reference the (your name here signature content) to understand how the writing style should read.

Here’s what it would look like if I did this:

You’re an expert in marketing analytics and marketing attribution. You know Google Analytics 4, Google Tag Manager, data layers, attribution analysis, multi-touch attribution, uplift modeling, and marketing mix modeling. Your task is to write a blog post about the importance of uplift modeling to measure the effects of offline marketing activities. Background information: uplift modeling is a useful way to determine the impact of an activity outside a clickstream and digital analytics, by declaring which time periods in a measurement set have the activity in question. Using techniques such as propensity score matching where the control and test units are individual days, we can measure the impact of a tactic while discounting data that’s “business as usual”. Using the writing style of Christopher Penn as provided, write the blog post about uplift modeling in the writing style of Christopher Penn. Write 800 words in three sections: what the concept is, why the concept is important, and how to implement the concept. Use the writing style of Christopher Penn strictly; reference the Christopher Penn Signature Content to understand how the writing style should read.

You’ll add the Signature Content and the Writing Style Analysis documents along with the prompt.
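Because the generation prompt has the same skeleton every time, it’s a natural candidate for a template. Here is a Python sketch that assembles it from the role, action, and context pieces. The function and parameter names are hypothetical; the wording follows the example prompt above.

```python
def build_generation_prompt(
    roles: str,
    action: str,
    context: str,
    author: str,
    topic: str,
    word_count: int = 800,
) -> str:
    """Assemble the Step 4 prompt from its role, action, and context pieces."""
    return (
        f"You're an expert in {roles}. "
        f"Your task is to {action}. "
        f"Some background information: {context} "
        f"Using the writing style of {author} as provided, write the blog post "
        f"about {topic} in the writing style of {author}. "
        f"Write {word_count} words in three sections: what the concept is, "
        f"why the concept is important, and how to implement the concept. "
        f"Use the writing style of {author} strictly; reference the {author} "
        f"Signature Content to understand how the writing style should read."
    )

prompt = build_generation_prompt(
    roles="marketing analytics and marketing attribution",
    action="write a blog post about the importance of uplift modeling",
    context="Uplift modeling measures the impact of activity outside a clickstream.",
    author="Christopher Penn",
    topic="uplift modeling",
)
print(prompt)
```

The documents themselves (signature content and style analysis) still need to be attached or pasted alongside the prompt; the template only covers the instructions.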

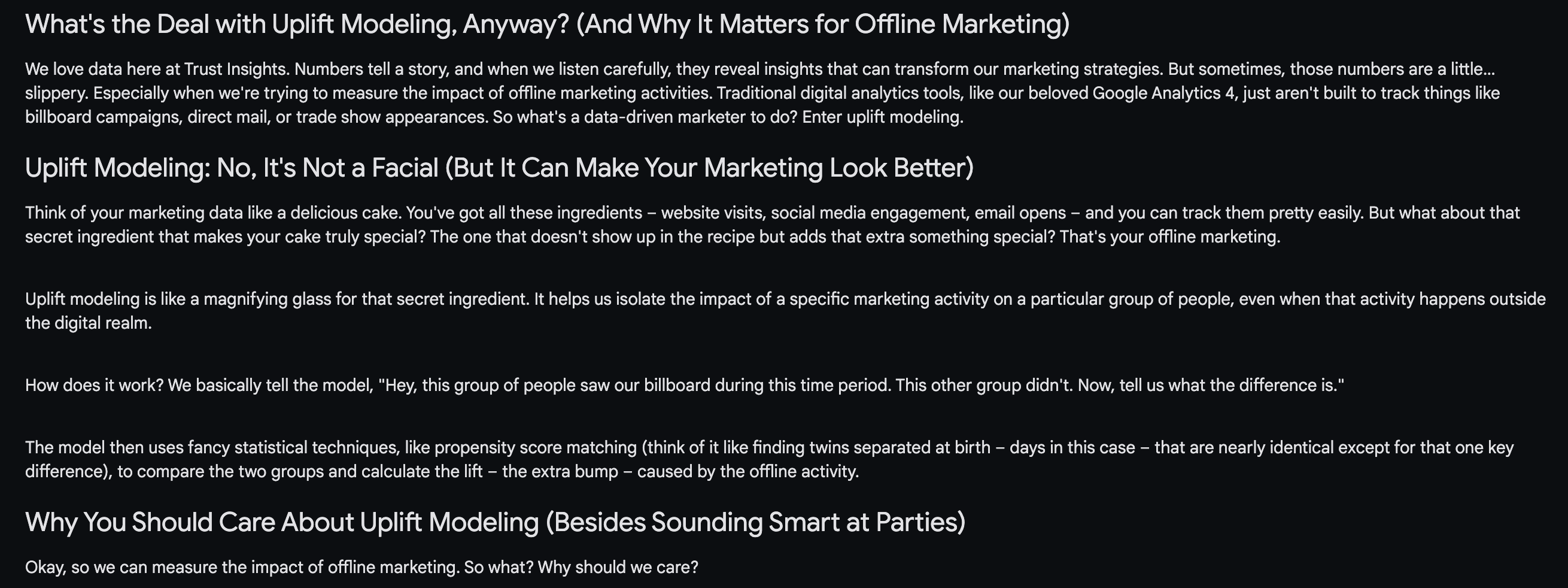

What does the model produce? A really nice blog post:

Now, we’re not done. We’ve only done the generation. We need to know how well the model did.

Step 5: Score the Content

As with an intern or a student, we need to give an AI model feedback about how well it did on a task. The most precise way to do this is the same as we do with schoolwork: we give it a grade. The nice thing about AI that isn’t true of schoolchildren is that AI is capable of grading its own work. We’ll use a prompt like this:

Using the (your name here) Writing Style Scoring Rubric, analyze and score the blog post you wrote according to the rubric. Return your analysis and a score of your blog post.

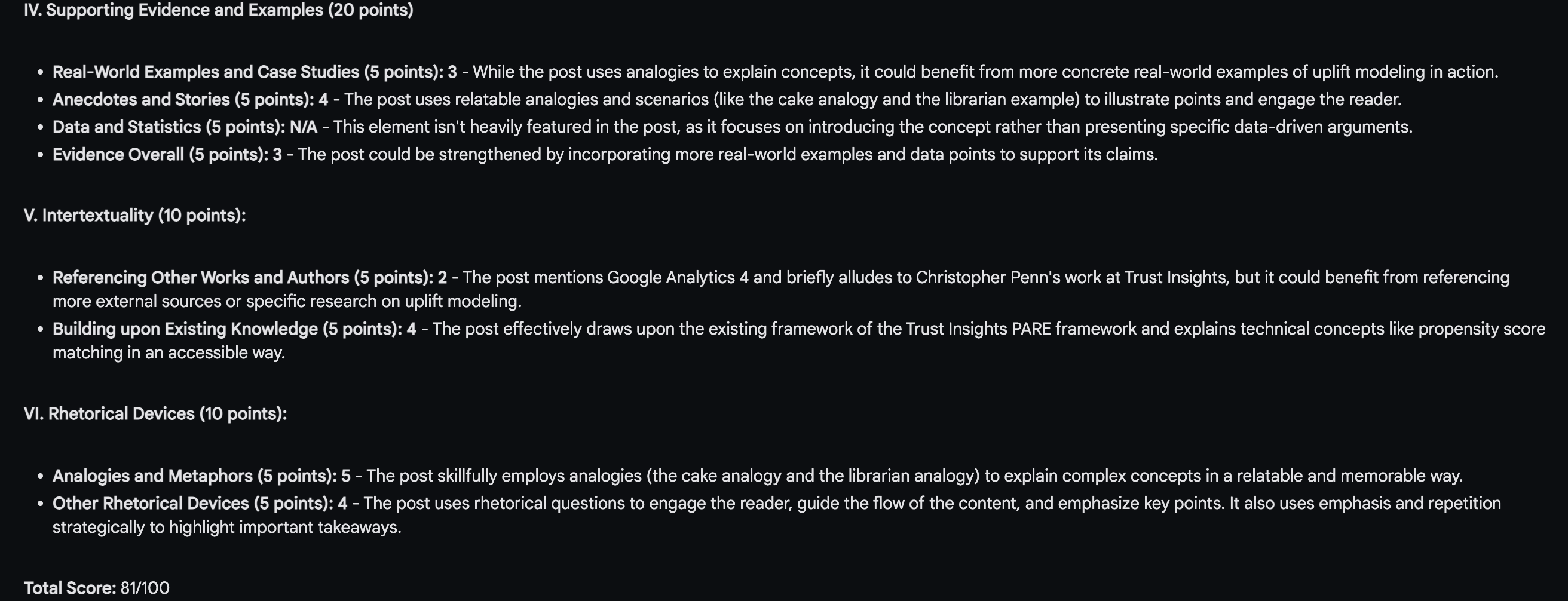

The model will return its outline of what it did well, what it did less well, and how it scored:

Not bad, right? We’re not done. This is only the first draft.

Step 6: Refine the Content

Now that we have a grade, we want to give our AI model a chance to improve its grade. Here’s an example prompt:

Using the score and analysis of your blog post and the (your name here) Writing Style Scoring Rubric, create a refinement of the blog post that improves on the score.

Let the model do its work to create a revision. Once it does so, ask it to score its revision:

Using the (your name here) Writing Style Scoring Rubric, analyze and score your revision.

What you should get is a score that helps you understand what the model changed and improved to achieve a higher score:

And let’s take a look at the final output. How well does this match me?

Not bad, right?

Part 4: Scaling Your Writing Style

You’ll notice that this process is incredibly thorough. It requires a lot of effort the first time around, though you can see after step 4 that it gets more efficient with subsequent uses. However, there’s still a lot of manual work.

What if you wanted to do this at scale? That’s a topic for a different newsletter, but the short version is that tools like Google Gemini have APIs. The APIs can take things like prompts and documents, then programmatically run and repeat the process from Step 4 onwards as many times as you want – with the caveat that each use of the API costs you money above and beyond what you already pay for generative AI.
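To give a sense of the shape of that automation, here is a Python sketch of the generate, score, and refine loop from Steps 4 through 6. It is not tied to any real API: the `Model` type is just “a function that takes a prompt and returns text”, and you would supply your own wrapper around whichever vendor’s client you use. The `NN/100` score format, the target score of 90, and the cap of three refinement rounds are all assumptions for illustration, and the fake model exists only so the sketch can run without spending money.

```python
import re
from typing import Callable

# Any function that takes a prompt and returns the model's reply as text.
# In practice this would wrap a real API client; that wrapper is not shown here.
Model = Callable[[str], str]

def parse_score(reply: str) -> int:
    """Pull a score written as 'NN/100' out of a reply (assumed format)."""
    match = re.search(r"(\d{1,3})\s*/\s*100", reply)
    if match is None:
        raise ValueError("No score of the form NN/100 found in reply")
    return int(match.group(1))

def score_draft(model: Model, author: str, draft: str) -> tuple[str, int]:
    """Step 5: ask the model to grade a draft against the rubric."""
    review = model(
        f"Using the {author} Writing Style Scoring Rubric, analyze and score this "
        f"blog post according to the rubric. Return your analysis and a score "
        f"out of 100.\n\n{draft}"
    )
    return review, parse_score(review)

def generate_and_refine(model: Model, generation_prompt: str, author: str,
                        target_score: int = 90, max_rounds: int = 3) -> tuple[str, int]:
    """Run Step 4 once, then repeat Steps 6 and 5 until the score is good enough."""
    draft = model(generation_prompt)
    review, score = score_draft(model, author, draft)
    rounds = 0
    while score < target_score and rounds < max_rounds:
        # Step 6: refine using the previous review, then re-score the revision.
        draft = model(
            f"Using this score and analysis and the {author} Writing Style Scoring "
            f"Rubric, create a refinement of the blog post that improves on the "
            f"score.\n\nReview:\n{review}\n\nBlog post:\n{draft}"
        )
        review, score = score_draft(model, author, draft)
        rounds += 1
    return draft, score

def make_fake_model() -> Model:
    """Stand-in model for a dry run: every review scores 10 points higher."""
    state = {"score": 70}
    def fake_model(prompt: str) -> str:
        if "analyze and score" in prompt:
            state["score"] += 10
            return f"Closer to the target style. Score: {state['score']}/100"
        return "Draft text"
    return fake_model

final_draft, final_score = generate_and_refine(
    make_fake_model(), "Write a post.", "Christopher Penn"
)
print(final_score)
```

The cap on rounds matters: every call costs money, and a model grading its own work can plateau, so you want the loop to stop even if the target score is never reached.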

But if you needed to drastically scale your writing, the process is something that can be automated and still do a very good job.

While that might not be something you want to do with your personal writing, you could see how useful that would be for deploying with a corporate style guide, for example. Suppose you’ve had a series of bloggers over the years at your company and all your content is a wild mashup of styles. With code, an API, a credit card with a decently large limit, and the pieces we’ve generated here, you could have a language model rewrite every piece of content to conform to your current style guide.

Shameless plug: if you want help doing that, that’s something that Trust Insights can do for you.

Part 5: Wrapping Up

I’m impressed that you made it all the way to the end. Congratulations. And I’m even more impressed that you tried each of the steps in the process and saw how they work. Perhaps you even have the necessary pieces to do this on your own now, and if so, I’m glad it was helpful.

The reason AI can’t easily mimic writing style without the extensive set of processes we walked through today is that writing style is such a complex beast. There are tangible aspects an AI model needs to know to create. There are intangible aspects that a model needs to know to mimic. And as with all writers, the first draft is rarely the best draft. By forcing the model to self-evaluate and then improve, we force it to become a better writer.

Is it an exact match of how you write? No. It never will be. So much of your writing, as we talked about with diction, is rooted in your emotional knowledge, something the machines don’t have access to. But will it generate a piece that’s really good? If you follow the process, the answer is yes.


Share With a Friend or Colleague

If you enjoy this newsletter and want to share it with a friend/colleague, please do. Send this URL to your friend/colleague:

https://www.christopherspenn.com/newsletter

For enrolled subscribers on Substack, there are referral rewards if you refer 100, 200, or 300 other readers. Visit the Leaderboard here.

Advertisement: Bring Me In To Speak At Your Event

Elevate your next conference or corporate retreat with a customized keynote on the practical applications of AI. I deliver fresh insights tailored to your audience’s industry and challenges, equipping your attendees with actionable resources and real-world knowledge to navigate the evolving AI landscape.
