Almost Timely News, 23 January 2022: Newsletter Format, Mind Readings

Get my 12 Days of Data eBook totally free, no forms to fill out »

What’s On My Mind: Newsletter Format

I was wondering something… this week, as you may remember, I published our quarterly ad as a video, and hundreds of you viewed it (thank you!). That got me thinking… would you also like this newsletter in multiple formats?

Let’s take a quick poll. Just click on your preferred format below – and you can click more than one. There’s no form to fill out or anything; clicking is literally the voting process.

- Click/tap here to vote for this newsletter in text format »

- Click/tap here to vote for this newsletter in audio format »

- Click/tap here to vote for this newsletter in video format »

I’m curious to see what your answers are.

A little behind the scenes too: what I’ve done to make a super simple poll is send every option to the same landing page, but each option has a unique query parameter (that thing after the question mark in the URL):

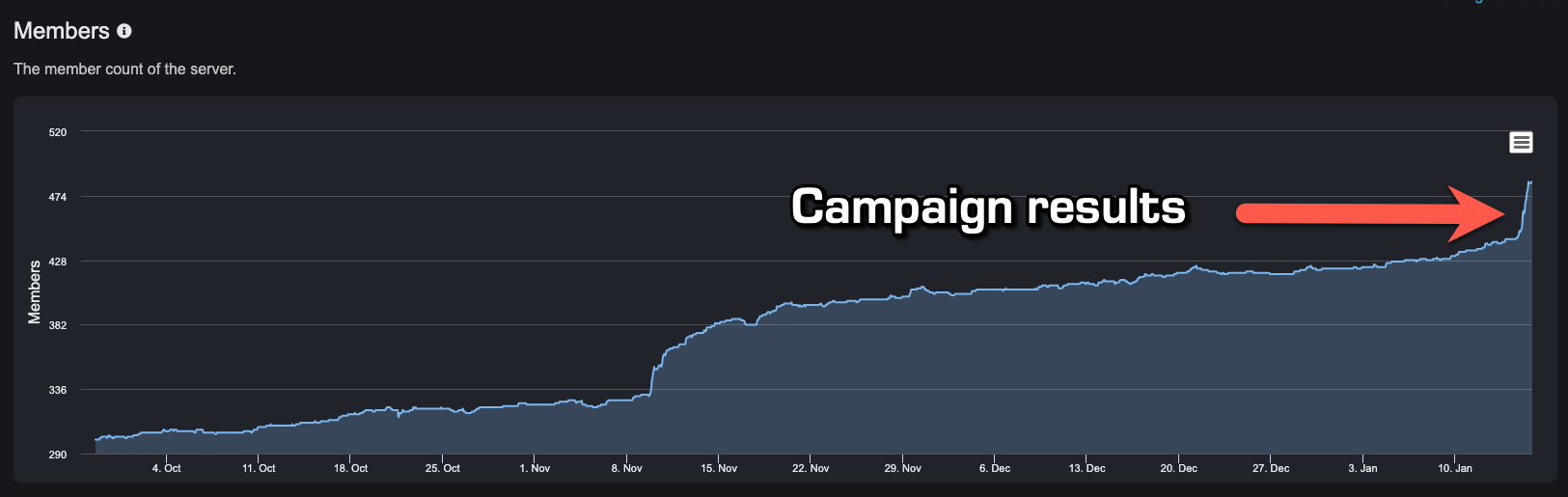

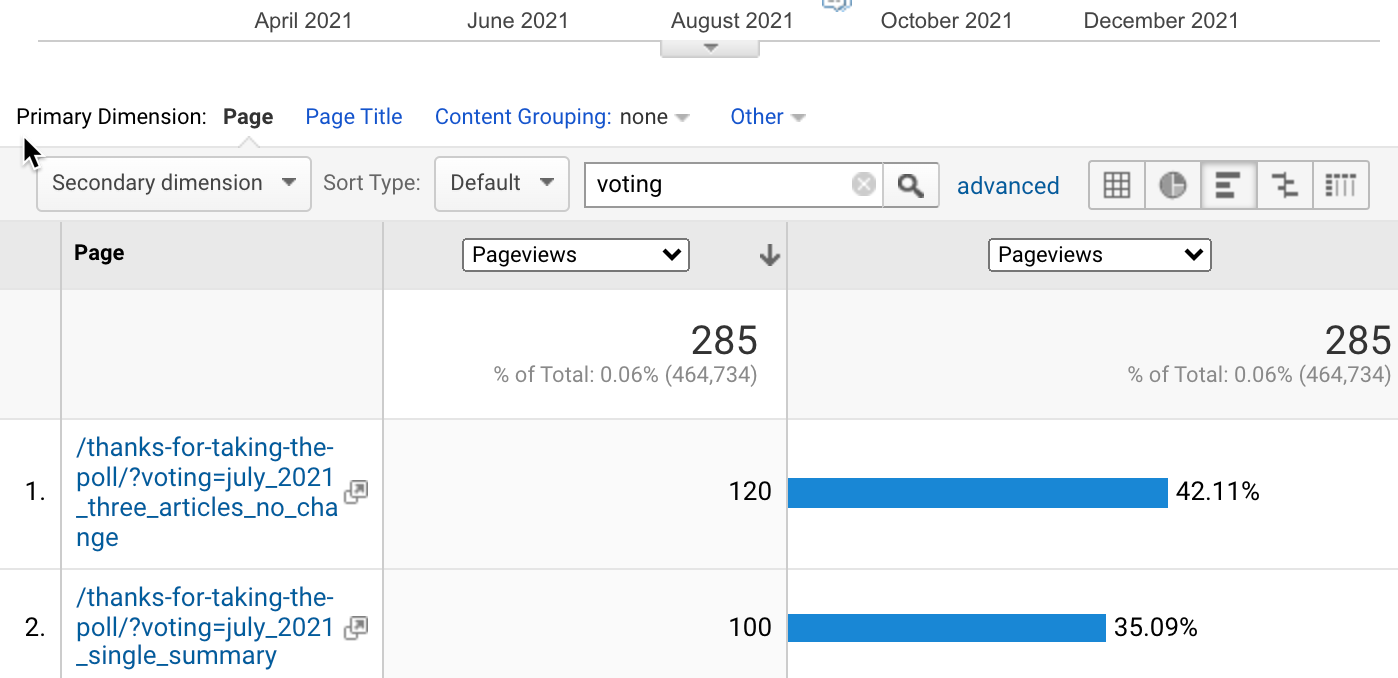
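If you'd like to generate links like these yourself, here's a minimal sketch in Python. The landing page URL, the `vote` parameter name, and the UTM values are all placeholders I made up for illustration, so swap in your own.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical landing page, used only for illustration.
LANDING_PAGE = "https://www.example.com/thank-you/"


def build_poll_links(base_url, options, param="vote", utm=None):
    """Return {option: url}: same landing page, one unique query parameter value per option."""
    scheme, netloc, path, query, fragment = urlsplit(base_url)
    links = {}
    for option in options:
        params = parse_qsl(query)            # keep anything already on the URL
        params.extend((utm or {}).items())   # optional UTM tracking codes
        params.append((param, option))       # the part that makes each link unique
        links[option] = urlunsplit(
            (scheme, netloc, path, urlencode(params), fragment)
        )
    return links


links = build_poll_links(
    LANDING_PAGE,
    ["text", "audio", "video"],
    utm={"utm_source": "newsletter", "utm_medium": "email"},  # assumed values
)
for option, url in links.items():
    print(option, url)
```

Every link lands on the same page; only the value after `vote=` changes, and that value is what identifies the vote.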

Once you arrive on my website, Google Analytics reads the URL, ingests its tags (UTM tracking codes), and then records the rest of the information. That means anything I put on the URL also gets recorded. Here’s an example from last year:

You can see the results of the poll right in Google Analytics, which keeps things very easy and straightforward.
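If you'd rather count the votes outside of Google Analytics, say from an exported list of landing page URLs, the tally is only a few lines. This is a rough sketch: the `vote` parameter name and the sample pages are invented for the example, not real poll data.

```python
from collections import Counter
from urllib.parse import parse_qs, urlsplit


def tally_votes(page_urls, param="vote"):
    """Count how many times each value of the poll parameter appears."""
    counts = Counter()
    for url in page_urls:
        # parse_qs maps each parameter name to a list of its values.
        values = parse_qs(urlsplit(url).query).get(param, [])
        counts.update(values)
    return counts


# Made-up sample rows standing in for an analytics export.
sample_pages = [
    "/thank-you/?vote=text",
    "/thank-you/?vote=video",
    "/thank-you/?utm_source=newsletter&vote=text",
    "/thank-you/",  # no vote parameter, so it is ignored
]
print(tally_votes(sample_pages).most_common())
```

Pages without the poll parameter are skipped, so ordinary visits to the landing page don't count as votes.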

Why wouldn’t I just rely on the click tracking in my email marketing software? Because we know that open and click tracking in email marketing are a little squishy. I can still do that tracking there, but Google Analytics filters out most bots and spiders if you’ve turned that setting on. That means that the data I get in Google Analytics should be a little more reliable and human.

Why is email marketing click tracking data squishy? Some folks have mail systems and servers that pre-fetch mail. Apple’s Mail Privacy Protection does this, which badly skews open rates. Companies that use high-end firewalls and other cybersecurity protections often have servers that will open and click on every link in an incoming email to check it for malware and viruses. That means, as an email marketer, you may see click-through rates that are higher than what real people actually generated.

So that’s why I use this particular method for polls in my email newsletters. It’s the least amount of work for you and my best effort at reliable data for me. Feel free to use this technique in your own email marketing!

Share With a Friend or Colleague

If you enjoy this newsletter and want to share it with a friend/colleague, please do. Send this URL to your friend/colleague:

https://www.christopherspenn.com/newsletter

ICYMI: In Case You Missed it

This week, I debuted a new series called Mind Readings. These are topics I had some thoughts about that I wanted to share with you, but weren’t questions anyone asked. They’ll be interspersed with the questions you send me.

- Mind Readings: The Hidden Danger of the Social Media Highlight Reel

- Mind Readings: The Language of Our Goals

- Mind Readings: Effective Writing Techniques for Marketing

- You Ask, I Answer: Analytics That Impact Conversions

- You Ask, I Answer: Email Marketing and Google Analytics Tracking

- {PODCAST} In-Ear Insights: Affiliate Marketing Overview

Skill Up With Free Classes

These are just a few of the free classes I have available over at the Trust Insights website that you can take.

- Fundamentals of Marketing Analytics (new!)

- How to Think About Google Analytics 4 (new!)

- Proving Social Media ROI

- Paradise by the Analytics Dashboard Light: How to Create Impactful Dashboards and Reports

- How to Deliver More Value as an Agency

Get Back to Work!

Folks who post jobs in the free Analytics for Marketers Slack community may have those jobs shared here, too. If you’re looking for work, check out these open positions.

- Senior Product Marketing Manager at PTC

- Paid Search Manager at Zoro

- Senior Marketing Analyst at Zoro

- Senior Account Executive at PipeCandy

- Paid Media Specialist at Hart and Highland

- CMO at Royer Thompson

- Digital Marketing Specialist at Duarte

- Rev-Ops Business Systems Analyst at Onshape

Advertisement: AI For Marketers, Third Edition

With every software vendor and services provider proclaiming that they too are an AI-powered company, it’s more difficult to demystify artificial intelligence and its applications for marketers. What is AI? Why should you care? How does it apply to your business?

In the revised Third Edition of AI for Marketers, you’ll get the answers you’ve been looking for. With all-new practical examples, you’ll learn about attribution modeling, forecasting, natural language processing, and how to start your AI journey.

Get your copy now in the version that best suits you:

» AI for Marketers, Digital Edition comes in Kindle, ePub, and PDF formats »

» AI for Marketers, Print Edition »

What I’m Reading: Your Stuff

Let’s look at the most interesting content from around the web on topics you care about, some of which you might have even written.

Social Media Marketing

- TikTok parts ways with marketing chief behind Kitchens, Resumes and other ‘rogue’ campaigns via TechSpot

- 5 Social Media Predictions Marketers Got Wrong Last Year

- LinkedIn Learning Offers Free Online Career Classes Until February 1

Media and Content

- Short-Form vs. Long-Form Content: Which Is Better for SEO?

- How to Measure Content Marketing ROI (Correctly)

- The Ultimate Quality and Content Performance Checklist

SEO, Google, and Paid Media

- Good SEO vs Great SEO: Experts Share 6 Key Differences

- The Ultimate Keyword Research Guide for SEO

- How to Use Google Search Console Insights for SEO Strategy via MarketingProfs

Advertisement: Supermetrics for Google Data Studio

Google Data Studio is an incredibly powerful tool for bringing your data into one place for analysis. Out of the box, it serves up Google ecosystem data that marketers need – Google Analytics, Google Search Console, YouTube, Google Ads.

But what about… Facebook data? LinkedIn data? SEO data? Data from your CRM or marketing automation software? That’s where Google Data Studio has some gaps.

Fill those gaps with the Supermetrics Integration for Google Data Studio with over 70 different supported integrations like most social networks, CallRail, Adobe Analytics, Bing Ads, Pinterest, Stripe, Semrush, Salesforce, and so many others. Get ALL your marketing data in one place for easy reporting.

Click here for a free trial of Supermetrics for Google Data Studio »

Tools, Machine Learning, and AI

- Why games may not be the best benchmark for AI via VentureBeat

- New startup shows how emotion-detecting AI is intrinsically problematic via VentureBeat

- Models Are Rarely Deployed: An Industry-wide Failure in Machine Learning Leadership via KDnuggets

Analytics, Stats, and Data Science

- INBOX INSIGHTS, January 19, 2022: Two Types of People, Solving Wordle Puzzles

- Roadmap to Master NLP in 2022 via Analytics Vidhya

- Layers of the Data Platform Architecture via Analytics Vidhya

Advertisement: Make Better Videos with Techsmith Camtasia

If you enjoy my videos, like You Ask, I Answer, Do Something With Your Marketing, Saturday Night Data Party, and many others, then consider using the platform I use to edit and publish them: Techsmith Camtasia. Camtasia is just the right balance between too easy and inflexible, like iMovie, and absurdly complex and expensive, like Adobe Premiere. It’s got just the right features, from subtitle editing to all the usual transitions and special effects, and it’s a desktop app, so there’s none of this crazy trying to “edit in the cloud” (which is insane for video production). If you need to produce videos, screencasts, and even animations, give Camtasia a try.

How to Stay in Touch

Let’s make sure we’re connected in the places it suits you best. Here’s where you can find different content:

- My blog – daily videos, blog posts, and podcast episodes

- My YouTube channel – daily videos, conference talks, and all things video

- My company, Trust Insights – marketing analytics help

- My podcast, Marketing over Coffee – weekly episodes of what’s worth noting in marketing

- My second podcast, In-Ear Insights – the Trust Insights weekly podcast focused on data and analytics

- On Twitter – multiple daily updates of marketing news

- On LinkedIn – daily videos and news

- On Instagram – personal photos and travels

- My free Slack discussion forum, Analytics for Marketers – open conversations about marketing and analytics

Required Disclosures

Events with links have purchased sponsorships in this newsletter, and as a result, I receive direct financial compensation for promoting them.

Advertisements in this newsletter have paid to be promoted, and as a result, I receive direct financial compensation for promoting them.

My company, Trust Insights, maintains business partnerships with companies including, but not limited to, IBM, Cisco Systems, Amazon, Talkwalker, MarketingProfs, MarketMuse, Agorapulse, Hubspot, Informa, Demandbase, The Marketing AI Institute, and others. While links shared from partners are not explicit endorsements, nor do they directly financially benefit Trust Insights, a commercial relationship exists for which Trust Insights may receive indirect financial benefit, and thus I may receive indirect financial benefit from them as well.

Thank You!

Thanks for subscribing and reading this far. I appreciate it. As always, thank you for your support, your attention, and your kindness.

See you next week,