At this year’s IBM THINK, I had the pleasure of joining fellow IBM Champions Jason Juliano, Steven Perva, and Craig Mullins along with IBM’s Luke Schantz, JJ Asghar, Elizabeth Joseph, and the legendary Gabriela de Queiroz for a wide-ranging discussion on AI, data, bias, quantum computing, genomics, and more. Give it a watch/listen!

Machine-Generated Transcript

What follows is an AI-generated transcript. The transcript may contain errors and is not a substitute for watching the video.

Luke Schantz 1:23

Hello, and welcome to the Champions Roundtable at the Think broadcast, day one.

I’m your host Luke Schantz.

And I’m joined by my co-host, JJ Asghar.

Hello, JJ.

JJ Asghar 1:34

How we work.

It’s been a little while since we’ve talked.

But let me go introduce myself real quick.

Hi, I’m JJ.

I’m a developer advocate for IBM Cloud.

And I’m here.

We’re here to talk about some cool things from IBM.

Luke Schantz 1:50

Yeah, well, JJ and I were hanging out earlier today watching the live stream of the Think broadcast.

And we had a good time; we were able to chat and go over all the content that was revealed today.

And now I think JJ is going to welcome some of our guests. JJ, why don’t you welcome the IBMers that are gonna join us today?

JJ Asghar 2:08

Yeah.

Hey, so Lyz Joseph is a good friend of mine, or Elizabeth Joseph; we’ll see her pop in here in a second, hopefully.

And then Gabriela, who, well, I assume a lot here: when I hear AI and data, I just assume Gabriela is part of the conversation.

So this is going to be amazing.

How are y’all doing? Lyz, tell us about yourself.

Elizabeth Joseph 2:32

Great, thank you.

So I too, am a Developer Advocate.

But I’m working over on the IBM zSystems side.

So we had a big launch recently with the IBM z16, a new mainframe version coming out.

But my background actually is more in open source and distributed systems.

So before I joined IBM, three years ago, I spent about 15 years doing Linux systems administration.

So it was really fun watching the broadcast this morning, because I got to like, you know, dive into a bunch of little things here and there.

So yeah, that was cool.

And I’m happy to be here.

JJ Asghar 3:05

Awesome.

Thanks for that.

Gabriela.

AI equals Gabriela, is that right? Am I right? Can you tell me a little bit about yourself, please?

Gabriela de Queiroz 3:15

Yeah, absolutely.

And thank you for having me here.

My name is Gabriela de Queiroz.

I’m a chief data scientist working on AI strategy and innovation here at IBM.

But I’m also working on open source; I’ve been working with open source for several years in the data and AI space.

Not only contributing to open source, but also consuming the open source technology that we have around the world.

JJ Asghar 3:43

Awesome.

Thanks for that.

Hey, Luke, I got a question for you, buddy.

For the audience, how do you ask questions? We’ve got some awesome people on this.

And we’re bringing in more, but how do they ask questions?

Luke Schantz 3:56

That is a great question about questions.

So wherever you’re watching this, if there is a chat function there with your streaming platform, you can just drop those questions right into that chat.

And we’re going to be monitoring those and filtering them into this stream.

So you know, probably towards the end of the stream, we’ll get to those questions.

But if you’ve got these, please drop them in there.

And if there’s any questions that we didn’t get to, there will be an Ask Me Anything that you can find on community.ibm.com.

After this stream, it’ll be chat-based, so we’ve got you covered.

We’re gonna try to get your questions in here.

But if we can’t, we got you covered after the stream, we’ll be able to answer your questions.

JJ Asghar 4:38

Rock on.

So who else are we bringing in, Luke?

Luke Schantz 4:43

Well, our next guests are part of the IBM Champions program.

And for those of you who are not familiar with the IBM Champions, they are experts and thought leaders around IBM products, offerings and technologies.

They’re a diverse and global group who love to share their knowledge and expertise.

You’ll find them answering questions, creating content, running user groups, putting on events and helping others in the community.

So let’s meet the IBM champions that are gonna be on our show today.

Luke Schantz 5:13

Here they come.

They’re on there.

They’re coming.

It’s a little bit of a walk there.

They’ll be here soon.

Great.

Hello, Jason.

Hello, Steven.

Hello, Craig.

Hello, Chris.

So maybe we could just go around and have everyone introduce themselves.

Why don’t we start with Jason Juliano, Director at EisnerAmper Digital.

Jason Juliano 5:37

Yeah.

Hey, good afternoon, everyone, if you guys are on the East Coast. So I’m Jason Juliano; I lead digital transformation for EisnerAmper.

I’ve been an IBM Champion now for the last four years in data and AI, blockchain, and cloud.

And, yeah, thank you for having me here.

Luke Schantz 6:03

We appreciate you taking the time.

Steven Perva.

Please, please introduce yourself to our audience.

Steven Perva 6:09

Absolutely.

Thank you, Luke.

I’m grateful to be here.

As mentioned, my name is Steven Perva.

Unlike Jason, I have only been a Champion since just 2022, for IBM zSystems.

I’m new to this game, but in my professional life, if you will, I am the senior mainframe innovation engineer at a company called Ensono, a managed service provider based out of Chicago and operating globally.

Luke Schantz 6:36

Excellent.

Thank you, Steven.

Craig Mullins, Principal Consultant and President at Mullins Consulting.

Hello, welcome to the stream.

Craig Mullins 6:43

Hello, thank you.

Happy to be here.

As you said, I’m an independent consultant.

I’ve been an IBM Champion since back when it was called Information Champion, so 14 years now.

I’m also an IBM Gold Consultant, which is conferred by IBM on what they consider the elite consultants. I’ve worked with database systems all my career, Db2 on the mainframe since version one, so you can see the gray in my hair.

I’ve earned it.

Luke Schantz 7:14

Thank you, Craig.

I’m looking forward to hearing what you have to say.

Chris Penn, chief data scientist at TrustInsights.ai. Hello.

Welcome to the stream, Chris.

Christopher Penn 7:25

Thank you for having me.

Yeah.

We’re an IBM Business Partner as well.

We’re an analytics and management consulting firm, based mostly in Massachusetts.

And I’ve been an IBM Champion now for five years, and spent a lot of time being a sponsor user, which is IBM’s program where they bring in folks like all the folks here, and we give feedback on early prototypes, ideas, proposed refinements and things.

I will just say also, Gabriela undersold herself.

She’s a member of the R Foundation, and I’m an avid R fan.

And for the last 10 years she has been heading up one of the largest R data science groups for women on the planet.

So she needs some additional props there.

JJ Asghar 8:05

Got a rock star, got a rock star.

Gabriela de Queiroz 8:07

I mean, I’m very humble, I have to say.

Yeah.

JJ Asghar 8:14

Excellent.

So yeah, go ahead.

No, no, you go, you’re speaking

Luke Schantz 8:19

first.

I was I was actually going to ask you.

I was like, we have so much to talk about.

We have an hour and a half.

Where do we want to start the conversation? I feel like

Christopher Penn 8:28

what do you think, JJ? Oh, well, I

JJ Asghar 8:30

think we should just start right out of the gate.

And let’s go around the room real quick.

And let’s kind of see, like, we all...

First of all, we confirmed we all did watch it, we all engaged in it.

So, you know, this is live and we’re not going to pull any punches.

But we all really did come away with something from watching the stream this morning.

So let’s go around the room, starting with Lyz: what was the one thing that just grabbed you? We’re not gonna go into detail.

But what was the thing where you were just like, yes, that is exactly what I was hoping to see or hear, or that excited you about the presentation?

Elizabeth Joseph 9:09

Oh, well, for me for sure.

I mean, the broadcast this morning was a little over an hour and a half and a full 30 minutes of that was spent on sustainability.

And that was really exciting for me to see.

It’s something I care a lot about.

JJ Asghar 9:21

Awesome.

Yeah, we’ll definitely go into that.

That’s great.

That’s great.

Gabriela, what about you?

Gabriela de Queiroz 9:29

For me, it was when Arvind was talking about successful leadership and transformation.

So he touched on several pieces and pillars that we’ll go into in more detail later, but these are the takeaways that I’ve been thinking about, and it’s something that we all should discuss and talk more about.

JJ Asghar 9:52

Perfect, perfect, perfect.

Chris, how about you, buddy?

Christopher Penn 9:56

I have two things that were really interesting.

One was the use of blockchain to provide transparency up through your supply chain; that was really important given how so many things like sustainability initiatives are based on knowing what’s happening throughout the value chain.

And the other one I was really happy to hear about was the Citizens Bank talk, where folks were talking about the future of cryptocurrencies and stuff and, you know, how it’s going to be made boring, which is a good thing, because that’s when something is no longer technologically interesting and becomes societally useful. And, you know, hearing that we’re going to get away from the wild west and random cartoons of apes being sold for $30 million, into here’s an actual use for the technology that might benefit people and could potentially justify the enormous energy usage of these platforms.

JJ Asghar 10:48

Well, you know, there’s a lot of jokes I can make from there, but we’re not gonna go down that path.

We’re gonna go over to Craig. What grabbed you? What was interesting to you?

Craig Mullins 10:57

Yeah, I think the main thing for me was that imbued in everything that was being discussed was data.

And even you know, you look at the sustainability conversation.

And they asked, you know, where do you start? And the answer was always start with data.

And I think that’s a good answer.

There’s a lot underneath that that really needs to be discussed.

And the one thing I always hear is, you go into an organization and they say, we treat data as a corporate asset, then you look at how they treat data, and they’re just filthy liars.

So I think there’s a lot to discuss there.

JJ Asghar 11:36

Awesome.

Steve, how about you?

Steven Perva 11:38

Yeah, so for me, I want to talk a lot about modernization. I feel like modernization was a topic that was a facet of almost everything that people were speaking about. For me, especially working in the mainframe space, that’s kind of my weapon of choice, I find that modernization is just a piece that flows across the whole thing.

The culture aspect of modernization is really important to me, especially as somebody with a few less gray hairs than a lot of my peers.

JJ Asghar 12:10

Awesome, awesome.

Jason, how about you? What was the thing that grabbed you about this view this morning?

Jason Juliano 12:18

Just a comment on that, Steve, real quick.

I remember when I was at a bank in New York, we were modernizing across, yeah, the mainframe to the AS/400, moving to iSeries.

So modernization has always been around.

But what resonated really well with me was, as Gabriela mentioned, Arvind’s talk on digital transformation, the culture change, how businesses need to adapt to, you know, AI, automation, sustainability.

And then you have sustainability being very integrated into everyone’s ESG plans, especially this year.

Excellent work.

Luke Schantz 13:05

Oh, is it my turn? Is it my turn? Oh, wow.

JJ Asghar 13:08

Oh, sure.

Yeah, let’s do it.

Luke Schantz 13:10

I didn’t realize I got to.

Well, I would have to concur that it was very interesting that sustainability was such a big part of the message.

And I think that we all know that there’s, you know, there’s lots of issues, and there’s lots of things we’ve been dealing with.

And I think clearly this is important to society.

And I think it’s important, you know, we’d like to think that companies always want to do the right thing, but we know that they’re driven by constraints.

And I think we have reached a breaking point. If we see such a large portion of such a high-profile event dealing with that topic, I think we can see that it’s important, you know, all the way through to the end users and the consumers that are using whatever app they want to; they want to ensure that the future is going to be there and that we can have sustainability.

And I think that that has trickled back and it’s really starting to penetrate the bones of, you know, the established organizations like IBM, so I was super excited about that as well.

Jason Juliano 14:09

Just tying back to that: if you’re looking at leveraging technologies, right, even with blockchain, you’re creating these ESG blockchain environments where you could actually track product carbon footprints across the supply chain, take a deeper look into all your suppliers and score them, and be 100% transparent across that data flow.
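To make the idea of a tamper-evident footprint trail concrete, here is a minimal sketch of a hash-chained ledger in Python. It is only an illustration and not how any IBM blockchain product works: a real blockchain adds distributed consensus, which this omits. The supplier names, the `kg_co2e` field, and all function names are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(record: dict, prev_hash: str) -> str:
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(ledger: list, record: dict) -> None:
    """Append a record, linking it to the previous entry's hash."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS_HASH
    ledger.append({
        "record": record,
        "prev_hash": prev_hash,
        "hash": record_hash(record, prev_hash),
    })


def verify(ledger: list) -> bool:
    """Recompute every hash; any edited record breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in ledger:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != record_hash(entry["record"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


def total_footprint(ledger: list) -> float:
    """Sum the reported emissions across all supply chain steps."""
    return sum(entry["record"]["kg_co2e"] for entry in ledger)


# Hypothetical supply chain steps for one product.
ledger = []
append_record(ledger, {"supplier": "farm-a", "step": "harvest", "kg_co2e": 12.5})
append_record(ledger, {"supplier": "mill-b", "step": "milling", "kg_co2e": 7.0})
append_record(ledger, {"supplier": "truck-c", "step": "transport", "kg_co2e": 3.5})
```

Because each entry’s hash covers the previous entry’s hash, quietly changing one supplier’s reported number after the fact makes `verify` fail, which is the transparency property being described.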

Elizabeth Joseph 14:40

Yeah, and another thing that was mentioned that was really fascinating to me was that, apparently, plant genetics are more complicated than human genetics.

They said the difference between two strains of corn could be as much as that between a human and a chimp.

And that was like, wow, that’s really something. But one of the things that they’re doing as a result is they’re using AI and machine learning to analyze the genes and find out the relationships.

So when they do their modifications for sustainable farming and the newer variants that they’re creating, they know what to tweak, because it’s not always obvious.

And I was blown away by that.

I was like, that is an amazing use of this machine learning technology.

Christopher Penn 15:19

One of the interesting things about the keynote, something I thought was interesting that was omitted, was that there wasn’t actually a lot of talk about AI and machine learning as an area of focus, right? We’re now at the point where it’s baked into everything.

It’s there, just sort of implicit: oh yeah, there’s machine learning in here too.

Luke Schantz 15:40

Yeah, the hype cycle, I feel like everybody was really focused on those kinds of buzzwords in the beginning, and now we’re getting to this.

What do they call it? The

Craig Mullins 15:50

It’s like the plateau of productivity.

Luke Schantz 15:53

That’s exactly what I was looking for.

Yeah, we’re getting to that plateau of productivity where it’s, it’s really starting to come into use, and it’s not just the buzzword that gets your attention.

It’s what you’re doing with it.

Steven Perva 16:04

Yeah, I’m not sure who it was, but said that once the technology starts to get boring, it becomes more pragmatic.

Right.

Clay Shirky? Yeah, I think that we see that in the management of systems space as well, right.

AIOps is a thing that’s kind of becoming more relevant today.

We’re monitoring systems as they go on to see: are we compliant? That’s a good piece of the picture. Are we on the verge of some major issue that is going to reduce availability? That’s something that truly fascinates me.

And as this becomes normalized, like you were saying, we’re seeing it just become what people like to refer to as table stakes, right? It’s just a part of the equation that’s always been there.

Much like modernization, like Jason got me on.

JJ Asghar 16:51

So we have some of the smartest people in the industry on this call, right? Or this Zoom, or whatever you want to call this way we’re talking right now.

And I want to take a quick step back.

And I want to ask about the whole AI ecosystem: tactically speaking, how do you engage in this world? Do you just start it? Like, I’m gonna just do AI tomorrow? Or how do we build this into our narratives as just a lowly engineer like myself?

Christopher Penn 17:27

Well, so.

So go ahead, Craig.

Craig Mullins 17:33

Okay, yeah, I wasn’t really going to say anything there.

But I think, you know, it’s not something where you can just sit down and do AI; there’s a lot that you’ve got to learn.

And I think you need to immerse yourself in the literature and understand what AI actually is.

When I look at some of the things that people tend to call AI, it’s that the marketer sees AI is popular, so something I’ve been doing for 30 years is now AI, and that’s not the case.

So it’s digging in and figuring out what you have to do in terms of building a model, and what is the creation of that model relying upon? And hey, it’s me, so that’s data, right?

And there’s a lot that you can do to be shoring up what it is you have that you’re gonna put AI on top of. If you put AI on top of bad data, you’re gonna get bad decisions.

So work on cleaning up the quality of your data, work on understanding your data.

And you see things like data fabric and data mesh being introduced, and people promoting them.

And I gotta say, if it’s got data in the title, I love it.

But what you’re actually calling it, you know, the fabric is this.

And the mesh is that it’s like, I don’t care.

It’s data management.

It’s all data management.

And you’re doing things like creating data quality, ensuring data stewardship, governing your data, ensuring compliance, cataloging your data.

That’s what we call it now.

We used to call it data dictionaries.

And after that, we called it repositories, and then we called it catalogs.

You know, you wait long enough, we’ll call it something else.

Let’s do it, instead of calling it different things over the 30, 40 years I’ve been in this business.

So instead of rushing to say I’m doing AI, why don’t you start doing the things that build your infrastructure that make AI possible?

Christopher Penn 19:38

AI is fundamentally math, right? So if you take the word AI out and just call it spreadsheets, suddenly it’s like, how do I start using spreadsheets? Oh, no, it’s a tool.

Right? So there’s four parts to all this, right.

There’s the tool, which is, you know, software, models.

There’s ingredients, which is what Craig was talking about: the data is the ingredient, right? Then there’s the part that no one talks about, which is the chef, right? And the recipe.

And if you don’t have a chef and you don’t have a recipe, it doesn’t matter how much of AI you have, right? You can’t do anything.

If you have, like Craig said, you have bad data, you have bad ingredients.

I don’t care how good a chef you are, if you bought sand instead of flour.

You ain’t baking edible bread, right? So AI is just an appliance in the kitchen of productivity.

And you’ve got to figure out the other pieces you don’t have.

And that’s the part that people think is magic, right? No. You’re like, yeah, your microwave’s kind of magic too; if you tried to take apart your microwave and put it back together, you’re probably not going to have a great day.

But it’s you don’t have to know how to reassemble a microwave to make use of it.

But you do have to know what it’s good for.

And oh, by the way, don’t put a potato wrapped in foil in the microwave.

Craig Mullins 20:54

After that, go to your marketing department and say let’s market math.

Gabriela de Queiroz 21:01

And, you know, yeah, I think with AI the hardest piece is the whole terminology, all these words.

You have to have a dictionary of the meaning of all the old words and the new words.

And then you have data fabric and mesh, and then you have data lakes, and then you have a bunch of technology where someone that is not into this world will get lost.

Right.

So the terminology is a big blocker.

I don’t I don’t think it’s like even the math, the statistics, but like the terminology itself, it’s very hard because you have to understand the terminology before being able to understand what is happening, right.

JJ Asghar 21:43

So, Gabriela, where do you start? Like you said, you learn the vernacular.

Okay.

Okay, cool.

So where?

Gabriela de Queiroz 21:54

Well, I would say it depends; it always depends on what I’m looking for, right? You can go as deep as you want, or as shallow as you want.

If you just want to be able to read some news and have some critical thinking around that,

you don’t need to go further into how the technique is being applied, or what a neural network is, or all the math behind it; you just need to have a general understanding.

So it depends where you want to go and how far you want to go.

So that’s the first thing.

The second thing that I always mention is to try to think about or get a use case that is related to an area that you are passionate about. For example, I don’t know, if you like microwaves, then maybe see if there is any AI related to microwaves and go deeper to understand AI and microwaves, right? It’s a domain that you like, microwaves, so you can go further and understand better.

Jason Juliano 22:54

mentioned.

Yeah, I was gonna say Arvind mentioned the McDonald’s use case, right.

So transforming the way that we order food today.

And I love, you know, telling the story through use cases.

And that’s a perfect story where, you know, we talk about AI technologies and automation.

Sorry, Lyz, go ahead.

Elizabeth Joseph 23:17

Oh, no, I was just because I think also, as technologists, we bring an interesting perspective to this to our organizations where they may not be aware of the tools available to them.

Because, you know, someone mentioned spreadsheets.

And I know, we’ve all been in a situation where we find out that someone in our organization is using totally the wrong tool to manage their data.

And sometimes it’s really horrifying.

And so I think that’s something we can also bring to the table in our organization, to say, like, listen, there are ways to do this.

And you don’t have to understand the underlying technology, but I can help you with this.

And you know, that’s really something that really empowers developers.

And by speaking up in your organization, it also is very good for your career.

Christopher Penn 23:58

Yeah, look, yeah, you’re trying to make soup but you’ve got a frying pan.

JJ Asghar 24:03

I mean, you can it’s not going to be

Luke Schantz 24:11

On the topic of, you know, when is it AI: it makes me think of this idea that, you know, we’re talking about these base levels, you’ve got math, you’ve got some logic, and I think at some point, let’s say even just in an application, when is it a function? When is it a script? And when does it become an application? I feel like there’s an emergent property here, that after you’ve done enough work, you can sort of get some semblance of functionality without having to do the work in the moment.

At that point it’s AI, and I don’t know when that is, but it seems to me that it’s the same stuff; you just need a bunch of it and the right pieces fitting together.

Christopher Penn 24:52

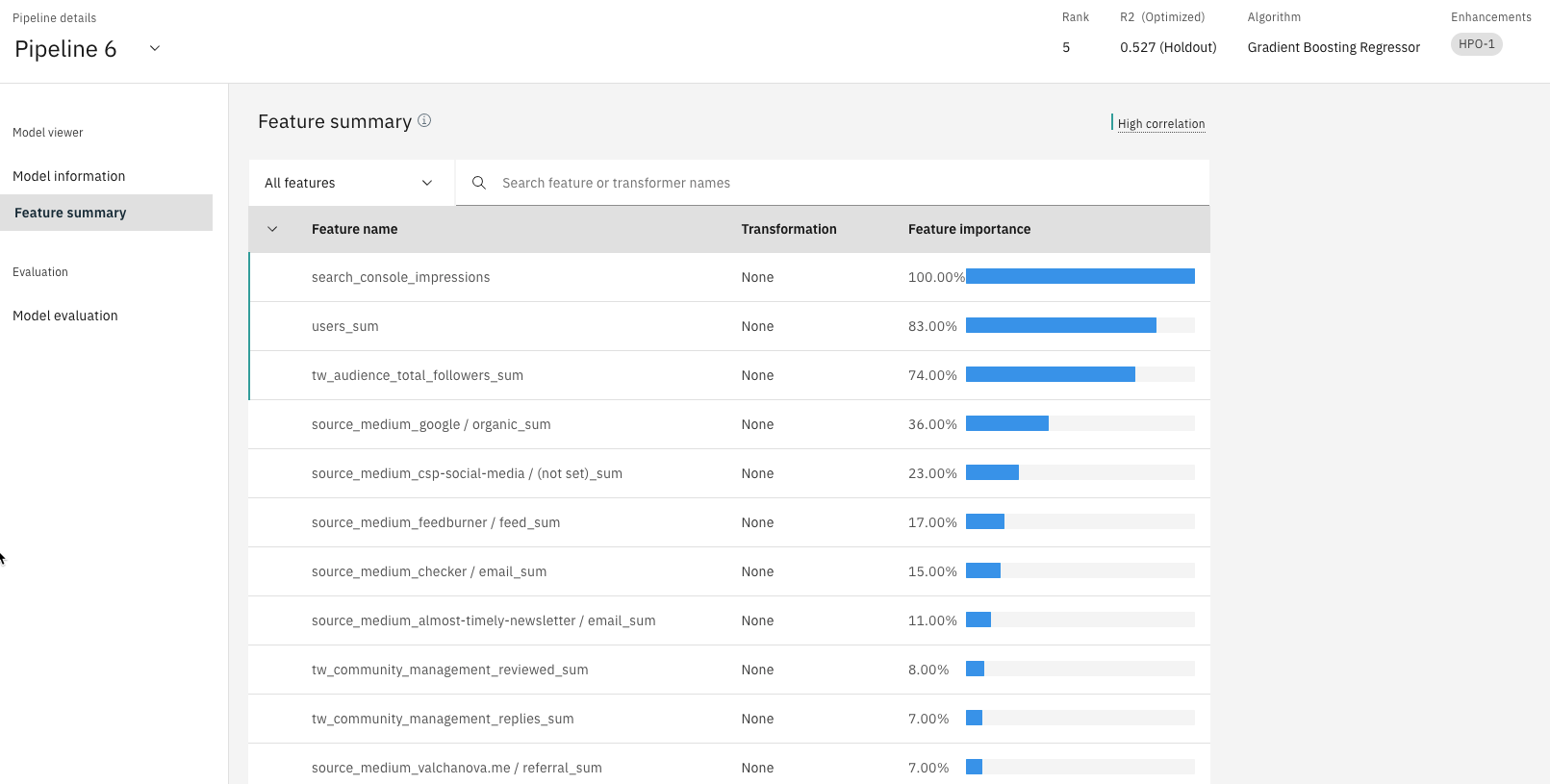

Typically, we say something is AI once software begins to write itself: once you’re feeding data in and having the software start creating something from that, as opposed to explicitly giving it instructions. You can specify, yeah, here’s a list of five algorithms you can use, you pick the best one. IBM Watson AutoAI does a lot of that: you say, here’s the outcome I want, here’s my data.

You figure out the rest.

And fundamentally for machine learning, if the software isn’t learning, if there’s no learning involved, then it definitely is not AI.

Right? Once learning starts getting involved, then you’re into AI territory, and then you get into deep learning, reinforcement learning, all the branches.

But if there’s no learning, it’s probably not AI.
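The "here’s a list of algorithms, you pick the best one" idea can be sketched in a few lines of Python. This is a toy illustration of automated model selection, not a description of how IBM Watson AutoAI is implemented; the three candidate models, the holdout split, and all names below are assumptions chosen to keep the example small.

```python
from statistics import mean, median


def fit_mean(xs, ys):
    """Always predict the average of the training outcomes."""
    m = mean(ys)
    return lambda x: m


def fit_median(xs, ys):
    """Always predict the median of the training outcomes."""
    m = median(ys)
    return lambda x: m


def fit_line(xs, ys):
    """Ordinary least-squares straight line through the training data."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx if sxx else 0.0
    intercept = y_bar - slope * x_bar
    return lambda x: slope * x + intercept


# The "list of algorithms" the software is allowed to choose from.
CANDIDATES = {"mean": fit_mean, "median": fit_median, "line": fit_line}


def mse(model, xs, ys):
    """Mean squared error of a fitted model on some data."""
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))


def pick_best(train, holdout):
    """Fit every candidate on train, score on holdout, return the winner."""
    scores = {}
    for name, fit in CANDIDATES.items():
        model = fit(*train)
        scores[name] = mse(model, *holdout)
    best = min(scores, key=scores.get)
    return best, scores


# "Here's my data": a made-up linear relationship, y = 2x + 1.
xs = list(range(10))
ys = [2 * x + 1 for x in xs]
best, scores = pick_best((xs[:7], ys[:7]), (xs[7:], ys[7:]))
```

The person supplies the data and the outcome to predict; the choice of model comes from the data rather than from an explicit instruction, which is the distinction being drawn.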

Steven Perva 25:41

Yeah, I think that goes to what Craig had mentioned: in the management space, we see a lot of people code things like, when this value is hit, take this action, right.

And a lot of people say that’s AIOps.

But really, there’s no learning happening there.

But when you say, here’s a sampling of what our system looks like over the past year, now you derive what that threshold is and what action to take to maybe self-remediate the problem, then that, I believe, is more AI than any type of knee-jerk reaction that you’ve predefined.
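The contrast between a predefined rule and a threshold derived from history can be sketched as follows. This is a deliberately simple statistical stand-in (mean plus three standard deviations), not an actual AIOps product; the CPU readings, the 90% limit, and the function names are all hypothetical.

```python
from statistics import mean, stdev

STATIC_CPU_LIMIT = 90.0  # hand-picked value: "when this value is hit, take this action"


def static_rule(cpu_percent: float) -> bool:
    """Predefined reaction: no learning, the limit never changes."""
    return cpu_percent > STATIC_CPU_LIMIT


def learn_threshold(history: list, k: float = 3.0) -> float:
    """Derive the alert threshold from what the system has actually looked like."""
    return mean(history) + k * stdev(history)


def learned_rule(cpu_percent: float, threshold: float) -> bool:
    """Alert when a reading is unusual relative to the learned baseline."""
    return cpu_percent > threshold


# Hypothetical sample of past CPU utilization for one system.
history = [40.0, 42.0, 38.0, 41.0, 39.0, 40.0, 43.0, 37.0]
threshold = learn_threshold(history)
```

With this history the learned threshold sits near 46%, so a reading of 60% passes the static rule unnoticed but is flagged as abnormal for this particular system. Deriving the remediation action itself, which is also mentioned above, is beyond this sketch.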

Craig Mullins 26:16

And that scares the pants off sysadmins: I’m going to turn this over to the computer, and it’s going to make all the decisions.

JJ Asghar 26:25

we make Craig.

Anyway, I gotta jump in and say, you didn’t put that Nagios alert on to restart the service when Nagios kicked?

I might be the only one to pick up this joke.

Oh, no, oh, no.

Okay, well, it’s got it.

There you go.

Elizabeth Joseph 26:44

It’s also funny because I can see us in 10 years, you know, people taking this stuff for granted, the stuff that AIOps is going to be suggesting to us. Like, I don’t write system calls manually on my Linux box, right?

The computer just does that for me.

But there was a time when people were flipping switches to make computers go.

So I think, you know, as time goes on as well, the stuff that AIOps does is just gonna be normal things, and we’ll trust the system at that point.

Craig Mullins 27:13

And when you look at something like the IBM Z, which is probably the most instrumented system on the planet in terms of the amount of data that it collects, just think of the opportunity that machine learning has when it’s placed on top of that wealth of data

they’ve been collecting for all these years, while maybe only looking at this much of it, because that’s what fit on the report for my DBAs.

JJ Asghar 27:41

So, so, so, Craig.

To follow up on the whole thing, you opened more data conversations there.

With the talk this morning.

Yes, this morning.

What else? What else, Craig, interested you? Like, where are we going with it? What announcement really helped open up your eyes to the future that we’re bringing to this world?

Craig Mullins 28:05

Well, you know, I think there’s a lot going on in the world of data.

And I don’t necessarily think I heard any specific announcement in today’s session.

And I think there might be a bit of misfortune in that.

You know, Arvind was talking about the IBM z16.

Great, wonderful platform, but where’s Db2 13, which was announced the same day?

And that has built-in AI and built-in things to improve performance and data movement.

And data movement is one of my key issues.

And I say that because last year, I was doing some consulting at a large healthcare provider.

And they had lost several of their DBAs.

And they brought in some IBM gold consultants to help them sort of transition until they can hire some new ones on.

And the thing that struck me was the sheer amount of time that they spent moving data from one place to another.

This was a mainframe site.

They had about a dozen DBAs.

And they had about 16 different environments.

So there was production.

And then there were all these test environments.

And they would spend two to three days just about every week moving data from production to test.

And that was just the Db2 environment; there were IMS DBAs doing that, Oracle DBAs doing that.

And this was just the data that was moved from production into test.

When you look at what they were doing to build their data warehouses and what they were doing to aggregate that data across,

I would say they spent so many MSUs just moving data from one place to another, and not very efficiently.

That there’s a wealth of things that could be done for not just this organization, but just about every organization out there who’s doing similar things.

So you look at what we’re doing with data.

And it’s great.

And we’ve got more data.

We’re doing all these things with data.

But you can’t take your eyes off of everything going on behind the scenes that allows us to do that.

And that’s your database administration and your data movement and, you know, just the cogs that keep that moving.

Christopher Penn 30:28

Craig,

Did you think it was weird? I remember hearing the data movement thing too.

Did you think it was weird that they didn’t mention anything about data velocity? Because it seems to me that it’s not just the volume of data, but it’s the speed of data that we’re getting, like anyone who’s done any work on any blockchain knows that.

When you reconnect your node, you have a data velocity problem as your machine tries to keep up with it.

And it strikes me that that didn’t get mentioned at all.

Craig Mullins 30:51

Yeah, my opinion is it’s probably pushback against big data and, you know, talking about the V’s; everybody’s been V’d to death, with volume and velocity and everything.

So now we’re gonna try not to mention that.

But I think that’s unfortunate.

Because you’re absolutely right there.

That is a key issue that organizations are challenged with today.

Christopher Penn 31:17

We saw during the last election, we have so much data and... go ahead, Jason.

Jason Juliano 31:22

No, I was gonna say what was mentioned today was from a quantum computing perspective: by 2025, they’re trying to push quantum computing on the cloud at 4,000 qubits, which I was reading a press release on this morning.

It’s roughly 10,000 Ops per second.

So yeah, potentially, if that, if that’s true.

It’s going to take us to a new level with some of these use cases and, you know, some risk management algorithms.

So yeah, I’m, I’m personally excited about that piece.

JJ Asghar 32:03

I’m not excited the same time.

Come on, nothing, nothing, no, come on.

Craig Mullins 32:10

Well, they’re talking about quantum-proof encryption on the IBM Z.

So you know, IBM is at least ahead of the curve there; they’re gonna give you the quantum computing to help you break down the encryption, but they’re going to protect at least the mainframe.

Jason Juliano 32:28

It’s gonna be an everyone else is supposed to get started now,

Craig Mullins 32:35

exactly.

Christopher Penn 32:39

I have a question, and this is probably a good one for Gabriela.

Given what’s going on with quantum, and the ability for machines to operate in states of gray,

do you think that accelerates progress towards artificial general intelligence, by having quantum computing capabilities and getting away from the restrictions that binary silicon has for AGI?

Gabriela de Queiroz 33:06

That’s, that’s a tough question, which I don’t know much where we are heading to in terms of like, it’s not my area of expertise.

But I feel like there is so much going on in the quantum space that it’s being hard to follow.

In a way, Arvind talked a little bit about this this morning.

We didn’t go into more details around quantum and all the advances.

But yeah, I don’t have much to say about quantum I just see like something that’s, it’s like, it’s going to be incredible.

IBM is in the front, with with all the technology, everything that’s going on.

And yeah.

Luke Schantz 33:50

I was gonna mention on the research.ibm.com blog, quantum dash development dash roadmap is the name of the post, but there’s a great post that kind of, you know, it’s more than we could get into here.

And I’m not sort of, I couldn’t explain it if I could read it and understand it, but I’m not going to be able to explain it.

But it’s amazing.

When you see Yeah, yeah.

And it’s following what we’re used to, right.

We, we work in these low level processes, and then we build better tools, and we build from there, and we build from there.

And that’s the same path we’re seeing with quantum where you’re gonna benefit from it without having to be an expert in it.

Elizabeth Joseph 34:27

Yeah, and one of the things that was interesting to me that I recently learned is that there are things that the quantum computer is really bad at.

And so there will always be a place for classic computing, and it will be a core component of all of this.

And I thought that was really cool.

Like, Oh, good.

Quantum is, you know, it’s it’s a whole new world for us.

Craig Mullins 34:47

So we’re not going to be taking that JCL and moving into a quantum computer you’re

Unknown Speaker 34:51

probably not, no.

Gabriela de Queiroz 34:53

which is interesting, because it’s something that we see in other fields as well. Like when we were talking about AI and the whole thing, like, oh, is AI going to replace humans, and everybody was like, oh, am I going to have a job in 5, 10 years?

And now we know that, no, it’s not going to replace us; it’s going to be different.

We still need humans. Or, like, even when you compare AI and machine learning with statistics, people say statistics is dead, you know, you should not learn statistics, you don’t need to know statistics.

And I’m like, oh, you know, statistics is the foundation for everything.

So like, so yeah, it’s very interesting, because like, you see things, you know, repeating in different domains and industries and topics.

Craig Mullins 35:37

Yeah, that’s a discussion that’s been around as long as automation, you know, every now and then when I talk about automating DBA features, people say, you can’t automate me out of a job.

And I think, well, you know, we’re gonna automate portions of you out of a job.

And that’s what our whole job as IT professionals has been automating portions of everybody’s job, right? We haven’t automated people out of existence yet.

We’re not going to anytime soon.

Steven Perva 36:05

That was a Go ahead.

JJ Asghar 36:08

Steven, I was about to just say, hey, you have some opinions here.

Steven Perva 36:11

Ya know, it’s, it’s just for me, it’s fascinating to see, to kind of riff off of what Craig was just talking about. I do a lot of process automation in my job using what we’re calling modern technology, in terms of Python and Node.js running on Z.

Right.

And, and we’re doing that process automation.

And the way I explain it is, we’re trying to automate the mundane, right? A lot of people are asking, well, what’s going to happen to me if this works? And I say, if your value is submitting JCL, then you’re severely underestimating your own talents, and you need to focus on what you’re really good at. What we need to do is get you away from doing these menial things.

So you could do the fun thought work.

I guess something else I wanted to add, riffing off of what Gabriela had mentioned, is when people talk about the fear of AI and what it’s going to do to the world. Something that Brian Young had mentioned right at the beginning, talking about AI, was how AI can bring a more equitable home buying process to people. That was really fascinating to me, to kind of learn how we can automate away the things that make people not as spectacular as we want to think we all are, right? Things like bias. The machine learning can get that element out of there. Let’s not have any bias, because the machine is not biased as to who we are as cultures or individuals.

So that was really fascinating and exciting to me to hear about, especially the whole idea of being able to look at something without someone calling me on the phone or texting me or sending me 55 emails to try to sell me something.

Hopefully the computers don’t pick up on that from us.

Elizabeth Joseph 37:53

Yeah.

Sorry.

During the segment about translations, they were trying to translate a lot of the research papers and other things into other languages.

And so people do translations, and then the AI and machine learning, they go and check the translations.

So it was a nice little way that humans and computers were working together.

Because neither one is going to be perfect at that.

Craig Mullins 38:17

Yeah.

And you mentioned bias, Steven, and you can automate bias out, but you can automate bias in

Christopher Penn 38:24

as well as automated in

Craig Mullins 38:27

Yeah, you look at facial recognition.

And you know, white male faces are easily recognized, but not much else.

And that’s because of, you know, bias inherent in the data fed into the algorithm.

So, you know, if there’s bias in our society, there’ll probably be bias in our AI.

Jason Juliano 38:46

Yeah, yeah.

Ethics, ethic.

Yeah, ethics.

And bias is huge, you know, just training, you know, a lot of these AI and ML models from the beginning, especially, as you mentioned, Steven, dealing with mortgages and home lending.

It’s huge.

So we definitely have to prepare for that.

Christopher Penn 39:06

The challenge is, this AI is inherently biased, and it is inherently biased to the majority, because all the training data has to come from somewhere.

And if you want a lot of data to build a model, you have to bring in a lot of data.

And the majority is always going to be represented in a lot of data, because mathematically, it’s the majority.

So one of the things that people should be taking a really hard look at.

IBM has a toolkit called AI Fairness 360, which you can find on the IBM website. It’s a set of libraries you can use in Python and R: you feed it your data, you declare your protected classes, and then you say, here are the things that we want to protect against. You know, if there’s a gender flag, you want it to be, you know, 40/40/20; if there’s a race flag, it should be proportional.

But the challenge that we’re going to run into is, how do you define fairness? So with mortgages, for example, should the approval rate reflect the population? Should the approval rate reflect an idealized outcome? Should it be blinded, like with hiring, where everyone has an equal chance? Or are you trying to correct for an existing bias? All four are fair.

But they’re fair in different ways.

And nobody has come up with an explanation yet about how do we agree on what is fair, because just blinding applicants for a mortgage may cause issues.

And the other issue we have, which is a big problem with data, and oh, by the way, we’re seeing this with social networks, is imputed variables, where I don’t need to know your race or your gender.

I just need to know the movies, music and books you consume.

And I can infer your gender and sexuality and age within 98% accuracy.

If you have an imputed variable in the data set like that, then guess what? You’ve rebuilt bias back into your dataset.
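The fairness questions raised here can be made a little more concrete with a small sketch. It does not use the AI Fairness 360 API; it is plain Python that computes two common group-fairness measures, the disparate impact ratio and the statistical parity difference, on made-up mortgage decisions. The group labels, field names, and counts are all hypothetical.

```python
from collections import defaultdict


def approval_rates(records, group_key="group", outcome_key="approved"):
    """Return the share of approved applications for each group."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for row in records:
        totals[row[group_key]] += 1
        if row[outcome_key]:
            approvals[row[group_key]] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact(rates, privileged, unprivileged):
    """Ratio of the unprivileged group's approval rate to the privileged group's."""
    return rates[unprivileged] / rates[privileged]


def statistical_parity_difference(rates, privileged, unprivileged):
    """Difference between the unprivileged and privileged approval rates."""
    return rates[unprivileged] - rates[privileged]


# Hypothetical toy data: group A is approved far more often than group B.
applications = (
    [{"group": "A", "approved": True}] * 60
    + [{"group": "A", "approved": False}] * 40
    + [{"group": "B", "approved": True}] * 30
    + [{"group": "B", "approved": False}] * 70
)

rates = approval_rates(applications)
ratio = disparate_impact(rates, privileged="A", unprivileged="B")
gap = statistical_parity_difference(rates, privileged="A", unprivileged="B")
print(rates, ratio, gap)
```

Which of these numbers counts as "fair" depends on the definition chosen, which is exactly the open question in the discussion: a ratio near 1 reflects equal approval rates, but says nothing about whether equal rates are the right target.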

Gabriela de Queiroz 40:54

Yeah, so like, Chris, you were mentioning AIF.

AIF360 is an open source tool that was created by IBM, and then we donated it to the Linux Foundation.

And so now it’s under Linux Foundation.

So we donated a lot of like open source toolkits around this topic.

So AIF is one; AIX, for explainability, is another one.

We have ART.

We have FactSheets, and there is also the IBM product OpenScale that you can use in a more, like, production-ready capacity, right?

Christopher Penn 41:29

Yeah, OpenScale’s really important because of drift, which is, again, something that people don’t think about when it comes to data. As more data comes in, if you started with an unbiased model but the data you’re bringing in is biased, your model drifts into a biased state by default. Microsoft found that out the real hard way when they put up a Twitter bot called Tay in 2016.

And it became a racist porn bot in 24 hours. Like, oh, well, that model didn’t work out so well.

But something like OpenScale does say, these are protected classes.

And it’ll sound an alarm, like, your model’s drifting out of the protected classes you said you didn’t want to violate.
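As a rough illustration of the drift alarm described here, the sketch below compares per-group approval rates in a new batch of decisions against a baseline and flags any group that has moved by more than a tolerance. This is not how OpenScale works internally; the function names, the 10-point tolerance, and the data are assumptions made for the example.

```python
def approval_rate(batch, group):
    """Approval rate for one group in a batch, or None if the group is absent."""
    rows = [row for row in batch if row["group"] == group]
    if not rows:
        return None
    return sum(1 for row in rows if row["approved"]) / len(rows)


def drift_alerts(baseline, new_batch, groups, tolerance=0.10):
    """Return (group, baseline_rate, new_rate) for groups that drifted past tolerance."""
    alerts = []
    for group in groups:
        base = approval_rate(baseline, group)
        current = approval_rate(new_batch, group)
        if base is None or current is None:
            continue  # not enough data to compare this group
        if abs(current - base) > tolerance:
            alerts.append((group, base, current))
    return alerts


def make_batch(group, approved, rejected):
    """Build a hypothetical batch of decisions for one group."""
    return ([{"group": group, "approved": True}] * approved
            + [{"group": group, "approved": False}] * rejected)


# Hypothetical data: both groups start at 50%; group B falls to 20% later.
baseline = make_batch("A", 5, 5) + make_batch("B", 5, 5)
new_batch = make_batch("A", 5, 5) + make_batch("B", 2, 8)

alerts = drift_alerts(baseline, new_batch, groups=["A", "B"])
for group, base, current in alerts:
    print(f"group {group} drifted: {base:.0%} -> {current:.0%}")
```

A production monitor would also need enough samples per group for the comparison to be meaningful; the fixed tolerance here is only a placeholder for that judgment.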

JJ Asghar 42:12

That Twitter bot, I still reference in some of my talks too, because it’s just, it’s an amazing story of trying to do the right thing.

And, you know, it just, it just goes the wrong way very quickly.

It was it was like 24 hours, it was completely they had to remove it.

And it was scrubbed from the internet.

Like we don’t talk about this anymore.

We don’t talk about I can’t say that because this is a I’m making a reference to a song that my kids listen to.

You don’t talk about? Yeah, okay, you got it.

So, we were talking about, there was a couple couple things popped up.

And we want to talk about supply chain, and the conversation around supply chain and how how vital it is to today’s world.

So can I have someone kind of talk about the thoughts that they had? Chris, this really grabbed you. So can you go a little bit deeper into what we’ve been talking about with supply chain?

Christopher Penn 43:14

So here’s the thing I think is really interesting about supply chain is that you don’t realize how deep chains go, right? We typically look at the boundary of our company like okay, if I make coffee machines, here’s where my parts come from.

Okay, well, great.

Well, where did their parts come from? Where did their parts come from? At the beginning of the pandemic, one of the things that we ran out of fairly quickly, that wasn’t talked about a whole lot, was things like acetaminophen and ibuprofen.

Why? Because those are made in China, but the precursors to those are made in India, right.

So a lot of the pharmaceutical precursors are manufactured in India. When India first had its lockdowns, shipments to China were interrupted, and then you had shipping disruptions in Shanghai and Shenzhen.

And that, of course, created this ripple effect.

But even something like, oh, somebody parks the cargo ship the wrong way in the Suez Canal for 16 days, is enough to screw up the entire planet’s shipping, because the system itself is so brittle.

And so one of the things I thought was so interesting about the idea of blockchain built into the supply chain is that not only do you get this, you know, unalterable audit trail of stuff, but just from a beginning to end perspective, where does what’s happened along the way, because if you have insight into that into everything on where your suppliers are coming from, you can build models, you can build analyses, like, hey, Russia just illegally invaded Ukraine and 50% of the world’s neon and 10% of the world’s platinum comes from there.

What’s that going to affect, and when is it going to affect us? If you know that your business is reliant on a component, and there’s a seven-month lag in that supply, then you know that in seven months you’re gonna have a problem on your manufacturing line.

That’s if you have insight into the entire supply chain; right now, companies don’t.

But if you have this kind of blockchain audit trail, this public ledger, that opens up being able to be much more predictive about what’s going to happen. Even as a consumer: if I could have access to a supply chain, right, and I know, hey, this stick of gum actually has a 12-and-a-half-week supply chain, then I know, okay, if something’s gone wrong in that chain, I’m gonna go out and buy, you know, extra gum now, so that I can anticipate that shortage.

I bought a snowblower in July last year.

Why? Because I knew that lithium was having supply issues, which meant that the electric snowblower I wanted to buy would be out of stock by the time winter rolled around.

So my neighbors are all looking at me, like, why did you buy a snowblower in July in Boston? Well, because I know the supply chain’s gonna be closed for months.

And it turns out, you know, by the time October rolled around, you couldn’t get snowblowers. They were out, because there were not enough batteries to power them.
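The "unalterable audit trail" idea can be sketched with a simple hash chain, in which each record stores the hash of the record before it, so changing any earlier record breaks every later link. This is only the tamper-evidence part of a blockchain, with no network, consensus, or real supply chain platform behind it; the record fields and function names are invented for the example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(payload, prev_hash):
    """Hash a payload together with the hash of the previous entry."""
    body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(ledger, payload):
    """Append a supply chain event, linking it to the previous entry."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS
    ledger.append({
        "payload": payload,
        "prev": prev_hash,
        "hash": entry_hash(payload, prev_hash),
    })


def verify(ledger):
    """Recompute every link; any edited entry makes this return False."""
    prev_hash = GENESIS
    for entry in ledger:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(entry["payload"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical trail for one component, from precursor to finished product.
ledger = []
append_entry(ledger, {"step": "precursor", "origin": "India", "lead_weeks": 6})
append_entry(ledger, {"step": "manufacture", "origin": "China", "lead_weeks": 4})
append_entry(ledger, {"step": "shipping", "origin": "Shanghai", "lead_weeks": 3})

intact = verify(ledger)
total_lead_weeks = sum(entry["payload"]["lead_weeks"] for entry in ledger)

ledger[0]["payload"]["origin"] = "somewhere else"  # simulate tampering
tampered_ok = verify(ledger)
print(intact, total_lead_weeks, tampered_ok)
```

With a trail like this, the end-to-end lead time is just the sum of the recorded steps, which is the kind of number that lets a disruption upstream be translated into a date when it reaches the manufacturing line.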

Craig Mullins 46:07

So you should have bought a dozen and opened up snowblowers.com.

Steven Perva 46:12

Chris has that dangerous foresight that I wish I had, and it’s like, I need that foresight when I plan my Disney trips. Chris, like, we need some of that.

Craig Mullins 46:22

Oh, they’ll they’ll put that on the blockchain.

So

Luke Schantz 46:28

Chris, you mentioned the word.

I think fragile or brittle.

And it is interesting that yeah, brittle because we, I feel like we’ve we’ve advanced, you know, the business science to like this height of efficiency, right, like Toyota Production System and lean systems and, you know, total quality management.

And now we’re realizing like, wow, this is that’s smart, but it’s, it doesn’t deal with the reality of it.

So where do we go from there? And is it sounds like you know, you know, maybe this is a place that, you know, AI computers,

Christopher Penn 47:00

Here’s the thing: everybody screws up TPS. Nobody gets TPS right except for Toyota. Toyota’s never said everything should be just in time and, you know, when the last part runs out, the truck rolls up.

It said that should be the case for non-essential things, and that in the actual Toyota Production System, essential parts are still backlogged.

Right, you still carry inventory; you may not carry as much.

But there is absolutely, you know, some resilience in the original Toyota system, if you look at the way Toyota Corporation does it. If you look at everyone’s bad implementation, because they’ve just read it in an HBR business case, yeah, they made their supply chains very, very, very foolishly brittle.

Luke Schantz 47:45

That’s interesting.

And you’re right.

I mean, people, we love to kind of boil it down to a simple answer and think we’re following it.

But the devil is, is really in the details.

And I just did, I just did read one of those cases.

That’s what I was thinking about it.

It was, it was about the Kentucky plant.

And like some problem with seats in the late 90s.

With Yeah, it’s a Yeah, it’s a pain points, but they figured it out.

JJ Asghar 48:12

I just went to Office Space.

Luke Schantz 48:17

It’s so funny, you say that when I was reading that report, I was like, Oh, I get this joke.

And at a deeper level, that is what the TPS report was. I didn’t realize it when I was watching the film.

But yes.

Jason Juliano 48:29

I was about to say, Walmart was an early adopter with the whole Food Trust thing.

You know, just identifying, like, you know, bad food, providing more food safety for consumers.

And identifying where that bad food, like, came from.

Right.

So it came from, like, a specific shipping container or a specific farm.

JJ Asghar 48:51

That is truly one of our best use cases.

And like, it’s so visceral, because we all need food.

Right? Like that’s, that’s the way we live as humans, is we need food. And to be able to use the blockchain to figure out that it was this one, in less than a couple of hours compared to the possible days, if not weeks, where you have that bad food out there.

That’s, that blows your mind? Yes.

Okay, I get it.

There’s a lot of work around it to get to that point.

But imagine if we started getting all that visibility, for lack of a better term, observability, into our supply chains. To what Chris was saying earlier, you’d be able to preemptively figure a lot of this stuff out, and then, you know, rub some Kubernetes and OpenShift and some AI on top of it too.

And then all of a sudden, we’re all making snowblowers.com or something like that.

Christopher Penn 49:51

Yeah, I mean, if you did any kind of predictive stuff, if you have the data, right, if you have good data underpinning it, you can forecast for a lot of things. It’s just getting that data and making sure that it’s good.

That’s the hard part.

Luke Schantz 50:08

And speaking of supply chain and food, it came up in the comments here, it is worth mentioning that, you know, the, the global disruption that we’re seeing now, because of the war in Ukraine is going to severely affect wheat specifically, right, as well as fertilizers.

And this is going to be a disaster that could affect many places, their food security, mainly Africa.

So it’s worth mentioning, and it’s a solid point, but it really brings home how important you know these things.

It’s funny to you know, these things, they seem boring, like, we’re just, you know, doing some back office thing, but really, you know, figuring these problems out, we can actually have a huge impact and create a very, you know, towards sustainability again, but also just, you know, quality of life for people.

Christopher Penn 50:56

Yeah, well, it’s not just Africa, India, Indonesia, Pakistan, they consume, like 20% of Ukraine’s wheat.

When it comes to corn, for example, China takes 28% of the Ukraine’s corn.

So there’s, it’s interesting, this nation is at the heart of Agriculture and Commerce for a, basically that half of the planet.

And it’s one of those things that you don’t realize how important it is until it’s gone until it’s not operating correctly, they missed this spring harvest, their spring planting, so there will be no spring planting for all of these crops, their seed oils.

They’ve taken an enormous amount of infrastructure damage for things like railways and stuff.

So they export iron ore and semi-finished iron products all over Europe.

And we have not even begun to see the long term effects of this yet.

I mean, it’s interesting as, as much as, as people are looking at the sanctions on Russia, what came out of Ukraine are precursor materials for everything.

And so you have these ripple effects that, again, we’re only now going to see. Here’s how this one thing, this seed oil that was made in Ukraine, which would go to Pakistan, to go to India, would eventually become your burrito.

That burrito that you buy at the store is either going to be more expensive, or it’s gonna be harder to get.

But you would know this now if you had that blockchain audit trail, like, here’s your burrito.

And here’s the 200 things that are connected to it to make this this microwavable burrito possible.

And it speaks to companies’ need to also have the ability to spin up domestic production.

Right? Nobody had masks for what the first four months.

I remember.

Because I’m a bit of a weird person,

I had a bunch of supplies stockpiled in my basement.

And a friend of mine, who was a nurse, said, hey, do you have anything? Because we have literally no masks in our ER. I’m like, yeah, you can have, like, you know, the N95s and stuff.

But I have military-grade stuff too, which doesn’t work in an ER.

And they’re like, how did you get these and like, I’ve had these for a while because I believe in, you know that things happen that are bad.

You should have stuff prepared.

But as companies as corporations, we don’t think that way we’re so used to, I’ll just go out and buy it.

Well, sometimes Russia invades you and you can’t buy it.

Jason Juliano 53:23

We got to prepare for via coproducer.

Craig Mullins 53:27

But what else you have in your basement? I’m gonna buy some of it.

Luke Schantz 53:34

I’m gonna start reading Chris’s blog.

Yeah, he knows what’s up.

Jason Juliano 53:38

I was reading a report, Chris, that I just found out a couple of weeks ago that Ukraine apparently is the biggest supplier exporter for fertilizer.

So that’s a huge disruption in our supply chain.

Christopher Penn 53:56

Yeah.

Harvard has the Atlas of Economic Complexity.

It’s on Harvard’s website.

It’s fascinating.

Like you can bring up a visualization and see here’s exactly what this country imports and exports how much you know how many billions of dollars and you’re like, I had no idea the supply chain for that.

Just that country was that complex?

JJ Asghar 54:19

Unfortunately, there’s no real easy answer to any of this.

Like we’re, we’re just going to be affected by this situation right now.

The

Christopher Penn 54:26

easy answer is don’t invade people, but... Oh, yeah.

Yeah, totally.

Totally.

JJ Asghar 54:29

I didn’t take that back.

But

Elizabeth Joseph 54:32

yeah, world peace.

Let’s do it.

Yeah, there you go.

Christopher Penn 54:39

That was the joke about that.

But at the same time, one of the things that’s really not discussed enough, particularly with stuff like AI and automation, is that and I was thinking about this with the security part of today’s keynote.

We don’t really ever talk about how to deal with bad actors getting a hold of the same technology that the good guys have, right? You know, when you think about quantum computing, right? Well, as Craig was saying, you’re talking about something that can basically shatter all existing cryptography.

Right? How do you keep that out of the hands of people who would do bad things with it?

Steven Perva 55:22

Yeah, that was a good question.

I was in an out-of-band conversation with somebody else, talking about quantum-safe cryptography and how people are harvesting data today with the intent to decrypt that data and use it later.

And I was like, how much of my data is so dynamic and moves so quickly that what they’ve gotten already is not still relevant to who I am? Say, like, where I live, I don’t move all that often. What my Social Security number is, I don’t change that. I haven’t changed my email since probably the day I started it.

Right? So these are all pieces of data about me that I’m like, what does that mean for me as an individual, with what I’ll call, like, heritage data, stuff that’s just not going to change about who I am? Like, you know, that’s always something where I’m like, oh, what is quantum-safe cryptography going to do to save me from that? And probably we’ll be talking about how AI is going to save me from someone impersonating me and someone trying to do things that I typically wouldn’t do, right?

Christopher Penn 56:26

Yeah, no, deepfakes are an example.

What spots deepfakes right now, more than anything, is when somebody does the audio wrong, right? Video is actually easier to fake than audio.

When you look at a deepfake piece of audio, what the computers always seem to get wrong is they always ignore the non-audible frequencies.

And so you can see when a piece of audio has been generated, as, oh, no one paid attention to the background noise.

And as a result, it’s clearly a generated sample.

But that’s a known, you know. One of the other things that we have to deal with is, okay, open source is great, and it levels the playing field, which means the bad guys also have access to exactly the same tools.

JJ Asghar 57:08

That’s a conversation that comes up all the time inside of the open source space.

Here’s where, you know, like, those bad actors, they come along.

And I mean, I make the joke that if you don’t keep keep an eye on your cloud costs, in general, it’s really easy for someone to come along with a container and start churning through for some mining of some sort.

And it’s literally a container you pull from like Docker Hub now, and it just starts churning away your money.

So you have to keep an eye on what what the usage is, where the things come from.

And that expires from open source communities where they’re like, hey, I want to make it really easy to build a bitcoin miner, or whatever, to go do those things.

To highlight the double edged sword that is open source.

Like the history guess, that kind of takes us into monetization.

I mean, I did mention Bitcoin and, you know, containers.

So Steve, you had some thoughts around modernization, didn’t you?

Steven Perva 58:12

Yeah, absolutely.

So for me, I’m always fighting this topic of modernization, especially in the mainframe space, right? People tend to associate the term with evacuating the mainframe in favor of, say, a cloud platform.

And believe it or not, my title, probably just a few weeks ago, used to be modernization engineer. I’ve been going through and scrubbing that, right? Because it’s no longer my title; because of that confusion, it’s now innovation engineer. Something that really got me, that was kind of an undertone in all the different conversations that were happening today, and that really excited me, was this idea of modernization and how those elements of culture play into that, right? And how people who can’t change quickly find themselves suffering.

I have a few notes here.

And hopefully, as we go along in this conversation, I can continue to dig those up and make valid points here.

But I see that a lot of it was just simply, if you can’t get your culture to today’s standards, you’re going to find that adopting new technology is going to be tough.

And for us, especially the younger folks, we’re finding that conversations like sustainability, conversations like equity, are things that are very, very important to us, as well as to a lot of other progressive folks.

And those are conversations that we want to have today.

And we focus on those topics when we’re talking about business success. So it’s not only, yes, can I access my data, can I access it in real time? But is the company I’m doing business with someone that I would want to be representative of, right? So you saw, especially with the Ukraine conflict, people calling out companies that are not ceasing operations, and people are choosing not to do business with them.

It’s simply, this does not align with who I am as an individual.

A business is not just the output anymore.

And I find that to be a really strong piece.

And I think that’s a facet of modernization, right? It’s the modern face of how people are doing business today.

Elizabeth Joseph 1:00:26

Yeah, that was actually brought up today, where they said, like, it’s not just about the stockholders, or, like, your shareholders, right? It’s about your stakeholders.

And that includes, you know, everyone from your employees and your customers to the entire world.

So that was really interesting that they brought that up, too.

Steven Perva 1:00:43

Yeah.

And so kind of just back on that that security topic, right.

I think it was Seamus, who mentioned that, that security and compliance and flexibility.

They’re just not nice-to-haves anymore, right? So back when I first started computing, cryptography was kind of just, let’s XOR a bunch of things together, and bam, it’s encrypted, right? Now we’ve got all these very elaborate encryption algorithms.

And it just has to be. It’s not something where we just say, oh, yeah, we can encrypt the data, we might as well, that way nobody gets at it.

Now that has to be the standard for everything.

And that’s something that people are starting to value more and more, right? I don’t recall who it was.

But they said, compliance is now a requirement.

And a breach is a big no-go. People will just not do business with anybody who’s not keeping their data secure.

And if they’ve been breached,

that’s kind of a death knell for any company at this point.
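For anyone curious what "XOR a bunch of things together" looks like, and why it no longer passes for encryption, here is a toy sketch. The key and message are made up; the point is that with a short repeating key, anyone who knows or guesses a few bytes of the plaintext can recover the key directly.

```python
def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each data byte with the key, repeating the key as needed."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


key = b"secret"  # hypothetical short repeating key
plaintext = b"account=12345;balance=900"

ciphertext = xor_bytes(plaintext, key)

# XOR is its own inverse, so the same function decrypts.
decrypted = xor_bytes(ciphertext, key)

# Known-plaintext attack: an attacker who guesses that the message starts
# with "accoun" can XOR that guess against the ciphertext to get the key.
known_prefix = plaintext[: len(key)]
recovered_key = xor_bytes(ciphertext[: len(key)], known_prefix)

print(decrypted == plaintext, recovered_key == key)
```

Modern ciphers are designed so that knowing some plaintext does not reveal the key, which is part of why vetted algorithms, rather than ad hoc XOR schemes, became the baseline expectation.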

Christopher Penn 1:01:48

Is it, though? I mean, if you look at the number of companies who have been breached, like, Target’s still in business, Walmart’s still in business.

I think we as a collective understand how important this stuff is.

But given some of the things you see, you know, the general public doing and what they value, security is an inconvenience.

And when you watch how people behave with security, you know, everything from Post-it notes with all their passwords on them, to being subject to social engineering, which I think is probably the biggest vulnerability we have.

Security is not that big of a deal outside of the people who get fired if it’s implemented incorrectly.

Right.

Elizabeth Joseph 1:02:38

It was also brought up how, like, governments are getting into this game, too.

Like, there are laws out there now in certain countries.

So it’s not, it’s not even that people are boycotting them.

It’s you can’t do business in our country, if you’re not going to be securing the data.

And I think that has to be a really important component of this, even though it’s really inconvenient to us.

I know, when a lot of the GDPR stuff came down.

We’re all like, oh, but you know, you know, looking back at that a few years later, like, it was really good.

And I think it changed our, you know, infrastructure in our industry for the better for sure.

Craig Mullins 1:03:11

Yep.

Whenever anyone talks about regulations and compliance, I always have two questions.

What’s the penalty for not being in compliance? Who’s the police.

So you know, you can put any penalty you want on it, if you’re not policing it.

I don’t care.

So you got to have stiff penalties and good policing, and implement those penalties when you’re against it.

And unfortunately, a lot of regulations GDPR is not one of them.

But there are regulations that just don’t have any teeth to them.

You know, and I go back to this whole sustainability thing.

It’s great, you know, we want to be sustainable.

And you mentioned, you know, that during the conference, they said, it’s not just your shareholders, it’s your stakeholders, and it’s a public at large.

And it’s like, If only that were true, I really wish that were the case.

But it’s all greed.

You know, maybe I’m just an old, Cranky man who looks at what’s going on in the world and saying, you know, that company is going to do what puts dollars in its pockets.

And that’s it.

And so unless we figure out a way to make sustainability, put dollars in the company’s pockets, it’s not gonna happen.

Christopher Penn 1:04:26

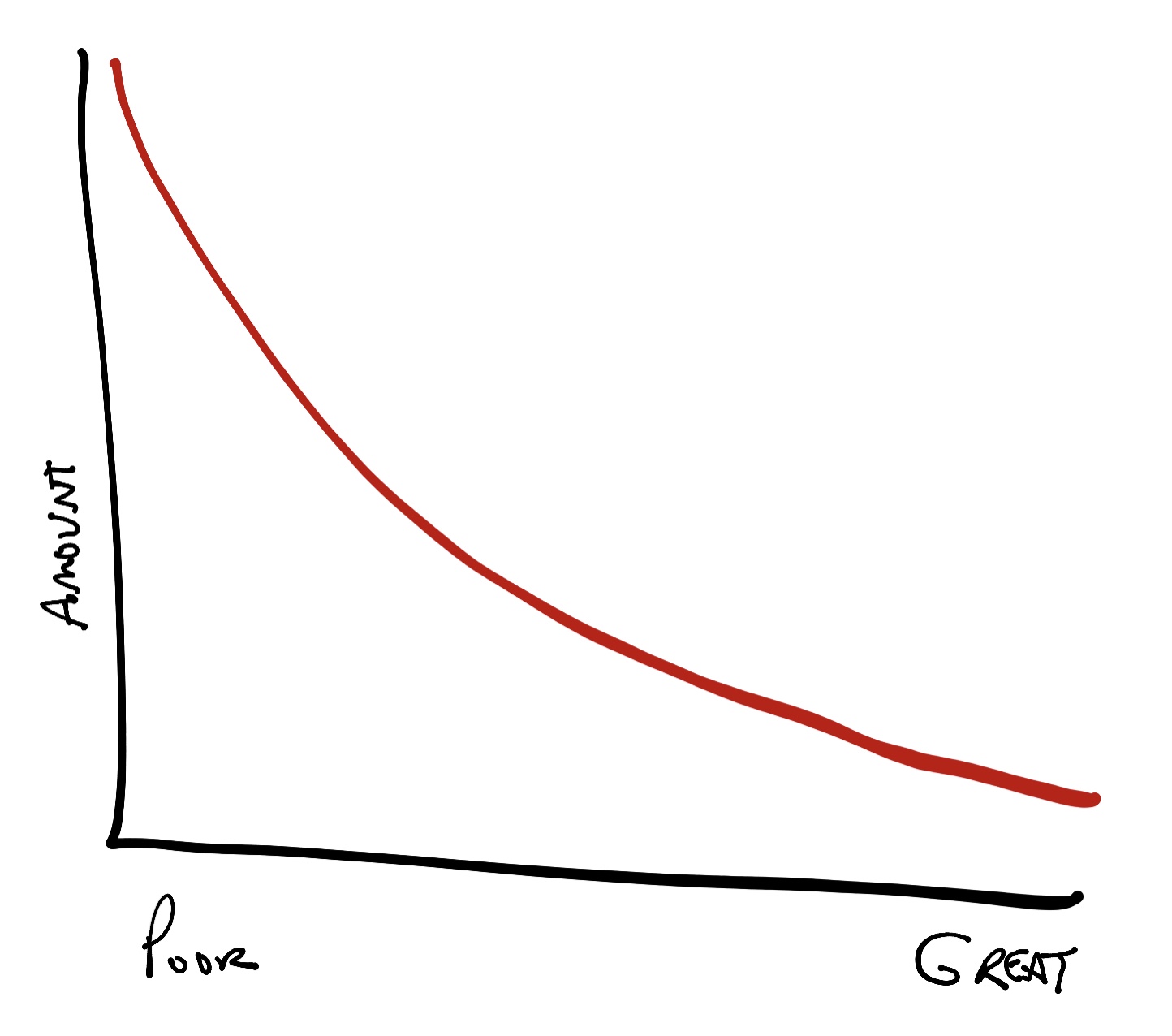

Yeah, it’s absolutely true.

If you look at the stats, what they’re saying is, you know, where the push comes from is the consumer.

If the consumer says I will buy the brand that is sustainable, and I will pay more for the sustainable brand.

If there’s enough of that, a company will say, in its own rational interest, okay, let’s make our products sustainable, because we can get a higher net profit margin off of being sustainable than not. But that’s where it’s got to come from.

Craig Mullins 1:04:54

True.

And that’s a first world solution.

I mean, you’re talking about people who are wealthy enough to pay more. There are people who are not wealthy enough to pay more.

And they’re always going to be going to Walmart to get that cheap shirt.

And who can blame them? Because that’s what they can afford.

So getting greed out of it is tough.

And, you know, I, I’m pulling for it to happen.

But I’m very, very skeptical.

Steven Perva 1:05:23

Yeah, I

JJ Asghar 1:05:24

think, correct.

Craig, we have a comment from the audience about what you’ve been saying, which is: oh, this is a reactive way to do business.

I’d like to see companies do the right thing, because it’s the right thing to do.

Craig Mullins 1:05:35

I’d like that too.

But that is not what is going to drive shareholder value.

That’s not what is going to get the board of directors to keep the CEO in business.

It just, it just isn’t.

So hopefully, we see, you know, things change.

And when you look at sustainability as an overall issue.

It’s like, what’s the future of the earth? And that’s when it becomes a political issue.

And I have no earthly idea how it ever became a political issue.

But it’s like, if you have children, you should care about sustainability.

What’s going to happen to your child when you’re dead? Do you want them to fry up? Or do you want them to live? And it’s as simple as that.

But unfortunately, the greed of people who live right now is sometimes more important than worrying about people who are going to be living 50 or 80 years from now.

Christopher Penn 1:06:41

One thing that is common here, though, that I think is worth pointing out is that companies and countries have no morals. They have no morals whatsoever.

They only have self interest.

No country ever does something just because it’s the right thing to do.

Countries behave in their self-interest.

The world is reacting to Ukraine, not because it’s the right thing to do.

But because it is in our self-interest to have a weakened Russia, right? It is in our self-interest to have Russia’s military incapable of conquering its neighbors. That is a self-interested thing to do.

And you’ll notice that when people watched the reactions, it wasn’t until day three, when it was pretty clear, oh, Russia is not going to steamroll Ukraine.

In fact, Ukraine is gonna punch them in the mouth repeatedly, that there was like, hey, this could work to our advantage.

Sustainability is the same thing.

We as people will make moral choices when we buy, but the company does not have morals. The company only has self-interest.

And we have to figure out how to make sustainability in the self interest of the company to say like, yes, let’s do this, because it’s profitable.

And, and we can make it work.

AI comes in good girl.

Gabriela de Queiroz 1:07:53

Now, that’s a very complicated discussion here.

And I think it’s also like cultural change.

And there are so many implications.

And one thing that we haven’t talked about yet, and Luke and JJ, I’m kind of, like, going ahead, but one of the things I think we should talk about from Think that we didn’t is the whole Arvind keynote, and everything that he talked about, you know, the takeaways on successful leadership and transformation during these times, right.

So I would love for us to address a little bit on that topic, because it was, at least for me, it was such an important topic that he was talking and discussing.

And it’s something that we see in companies and the whole in the whole environment right now.

It’s like, how do you scale? You know, how do you deploy? You need to make sure that there is scale in the leadership too, in order to do that. The other thing that he said, I think, was very interesting.

It’s like, it has become a world of show, don’t tell, right.

And then he said, you know, we need to transform the organization and be, like, doers.

So we need to work with somebody, we need to work with others, we need to work with partners.

And another important point is, like, we need to give the credit to who it belongs to, like, it belongs to the partner, and so on. And he talks about teamwork. So I felt like it was so different to hear from him.

And not different in a way that I was not expecting, but different because he touched on very important pieces that we don’t see a leader talking about much, especially about people, about teamwork, about being a doer, about giving credit. So I thought it was fantastic.

JJ Asghar 1:09:48

It takes a little extra, right? It takes a village to be successful.

And that’s what everyone was saying.

And from what I got out of it, which was, you know, we all have to meet at the same field to, you know, build the barn or whatever. I’m extending this metaphor way too far.

Gabriela de Queiroz 1:10:08

And exactly, and it’s not only about tools, right? No matter like the tools that we have, or like, we can talk about the whole hybrid cloud, like when we expanded.

And now we don’t need to work only with IBM, we can have, you know, the compatibility to work with different providers, right.

So, like, it takes a team to make the transformation.

Elizabeth Joseph 1:10:30

Yeah, and it also came up in the discussion with Red Hat as well, where they brought up open source software and how, you know, with things like Linux and Kubernetes, which OpenShift is built on, it’s the communities that developed that open source software. It’s more than one company can do.

And that’s really where the value comes from. So many people out there working on this, who have different interests and different goals, have really built some remarkable things out there in the open source world that we’ve, you know, then gone on to build products on, and we couldn’t have done it without them.

Craig Mullins 1:11:02

And this is really all part of IBM’s Let’s Create campaign, which I think was brilliant.

I mean, it’s a really great way of defining the company, as you know, what do we do we help you create, and it’s not just us, we bring in this whole community, to help you create, and then you become part of that community as you create.

It’s a great message.

Yeah,

Gabriela de Queiroz 1:11:25

So he says, like, you know, we have partners, we bring open source, you know, we invite the clients. So it’s such a different speech from what I’ve seen in the past, right?

Jason Juliano 1:11:39

It’s really changing the mindset of, you know, everyone’s culture, right.

So to co-create and co-collaborate with internal team members, partners, suppliers.

Steven Perva 1:11:51

Something that Arvind mentioned, and he very briefly said something about taking the ego out of it, I thought that was really profound.

That’s something that’s really important to me, especially when you collaborate, you know, with coworkers and colleagues, especially when you work cross-generationally, with people that are of a different generation from you: taking the ego out of that, and having that respect for one another.

And I think, to kind of, hopefully, tie it back in some way to the point we were just talking about, it is this democratization of the way we do things.

That’s, that’s huge.

I think it empowers individuals to get involved in solutioning together.

That lets somebody who’s maybe not affiliated with a large company, who has the talent to contribute to open source, make their voice heard, right? To say, yeah, you know, Chris had mentioned that consumers and countries may be self-interested.

But if we’re all involved in these open source initiatives, we can have our voices heard in that regard, as well, without relying on the corporate machine to do all the work for us, right.

I think that’s really important.

Christopher Penn 1:13:02

Let’s Create is a fun thing too, because for years, decades, IBM has been like, hey, buy our thing, right? Hey, here’s a new piece of iron, buy our thing.

And it’s like, you know, the appliance store: hey, buy our new blender.

And Let’s Create says, hey, why don’t you try cooking, and oh, by the way, you’re gonna need appliances, and IBM will provide them. It’s a smarter way of saying, let’s create stuff together.

And you’re going to need chefs and recipes and ingredients and appliances, probably from IBM. It’s a better way of thinking about it.

Elizabeth Joseph 1:13:34

And having studied like mainframe history myself, like, it’s a throwback to what we’ve always done.

I mean, the SHARE organization has been around since the 1950s.

And that’s an organization of like-minded folks in the industry who brought suggestions to IBM, and IBM was like, oh, that’s a good idea.

Let’s do that.

So it’s kind of coming full circle.

And of course, that organization still exists too.

Craig Mullins 1:13:55

Marketing.

You don’t talk about the product, you talk about the aspiration, right? Nike isn’t saying buy our shoes.

They’re saying, Here’s Michael Jordan, look at the shoes he’s wearing.

JJ Asghar 1:14:06

Yeah, the ability to offer open source and how IBM encourages open source work.

And us, as open source developer advocates are in that space.

We actually get to be there with that part of the community and be able to say, hey, we are encouraged to be part of the external communities and create that thing.

There’s a Venn diagram there, where that intersection happens.

We can say yes, of course, like you’re planning on going down this path.

OpenShift can actually make your life great.

But by the way, I’ve actually committed to OpenShift, right, like I actually understand that this can be part of your value prop.

And that’s so empowering to you on this point.

It’s a major change for IBM, and it’s only for the better.

Luke Schantz 1:15:02

And it’s interesting the mechanism, right that all of these companies that have sort of, you know, a company mission, and they need to make profit and do that thing, but they choose to be part of foundations and organizations that have rules and codes of conduct.

And part of it is they will benefit in the long run, but that process is something we can feel better about.

And it’s very interesting to hear about, you know, other aspects, like now attracting the talent that you’re going to want to work at your company.

If you don’t have these values, you know, you might think you’re making that, you know, beeline right towards the fastest profit and minimizing costs.

But if you don’t do it in the right way, your customers are going to abandon you and you’re not going to be able to have the employees, they don’t want to work that way.

Exactly.

Steven Perva 1:15:50

I think a good point to mention on that is, I don’t recall who it was.

But somebody had said that the pandemic was... I think it was the gentleman Amir from Discover who said that the pandemic was not just a disruption, but it was really just an opportunity for us all to learn.

And I think we’re seeing the consequence of that as well.

I’m fully remote, right? You’re really empowering people to live their lives and be individuals outside of their corporate identity.